You can easily get ChatGPT to run about shooting stuff

If you give it DOOM, running in Matplotlib

You may find yourself living in a shotgun shack. And you may find yourself working with GPT-4. And you may ask yourself, "Will GPT-4 run DOOM?" And you may ask yourself, "Am I right? Am I wrong?"

Adrian de Wynter, a principal applied scientist at Microsoft and a researcher at the University of York in England, posed these questions in a recent research paper, "Will GPT-4 Run DOOM?"

Alas, GPT-4, a large language model from Microsoft-backed OpenAI, lacks the capacity to execute DOOM's source code directly.

But its multimodal variant, GPT-4V, which can accept images as input as well as text, exhibits the same endearing sub-competence playing DOOM as the fraught text-based models that have launched countless AI startups.

"Under the paper's setup, GPT-4 (and GPT-4 with vision, or GPT-4V) cannot really run Doom by itself, because it is limited by its input size (and, obviously, that it probably will just make stuff up; you really don't want your compiler hallucinating every five minutes)," wrote de Wynter in an explanatory note about his paper. "That said, it can definitely act as a proxy for the engine, not unlike other 'will it run Doom?' implementations, such as E. Coli or Notepad."

That is to say, GPT-4V won't run DOOM like a John Deere tractor, but it will play DOOM without specific training.

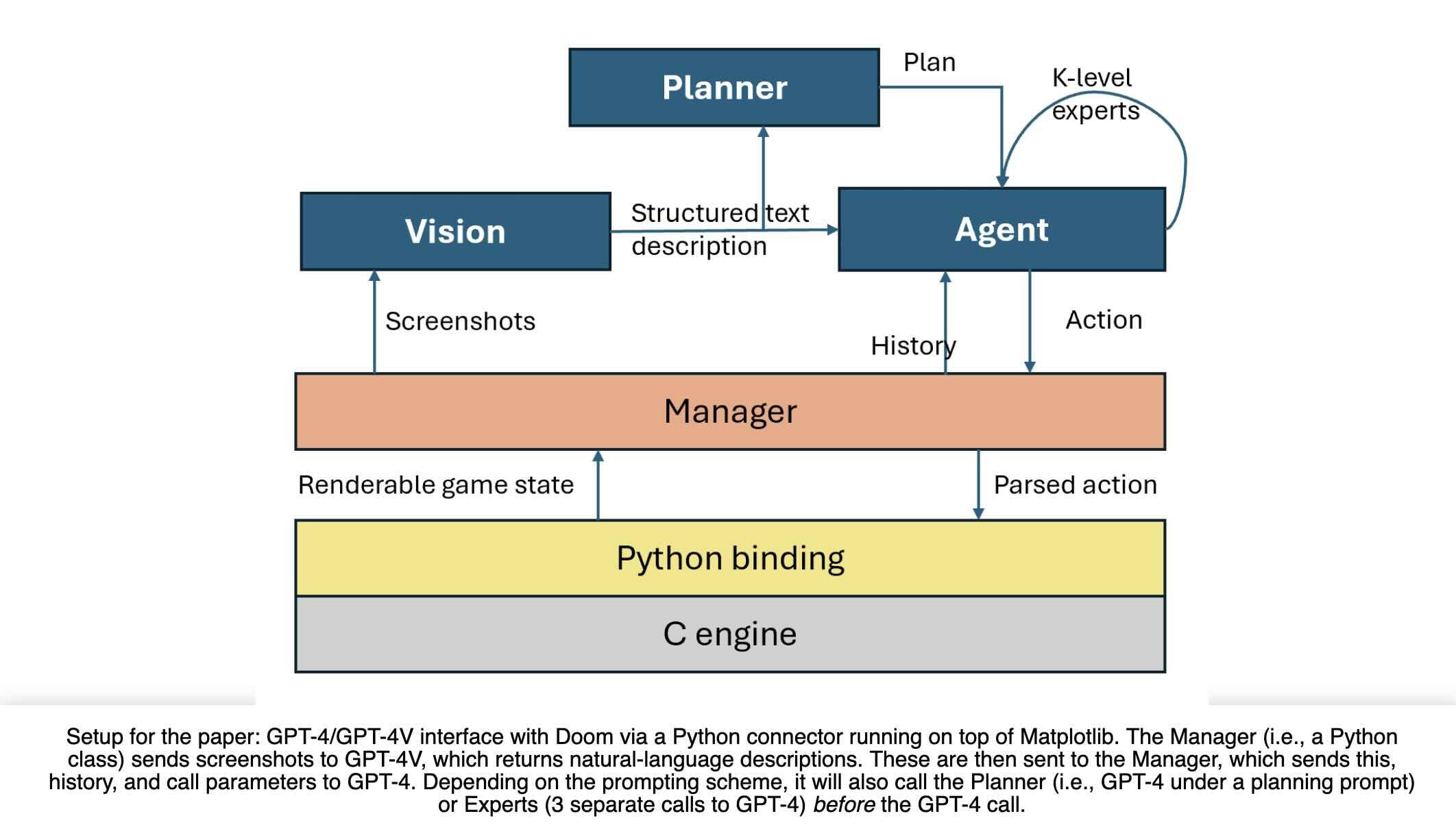

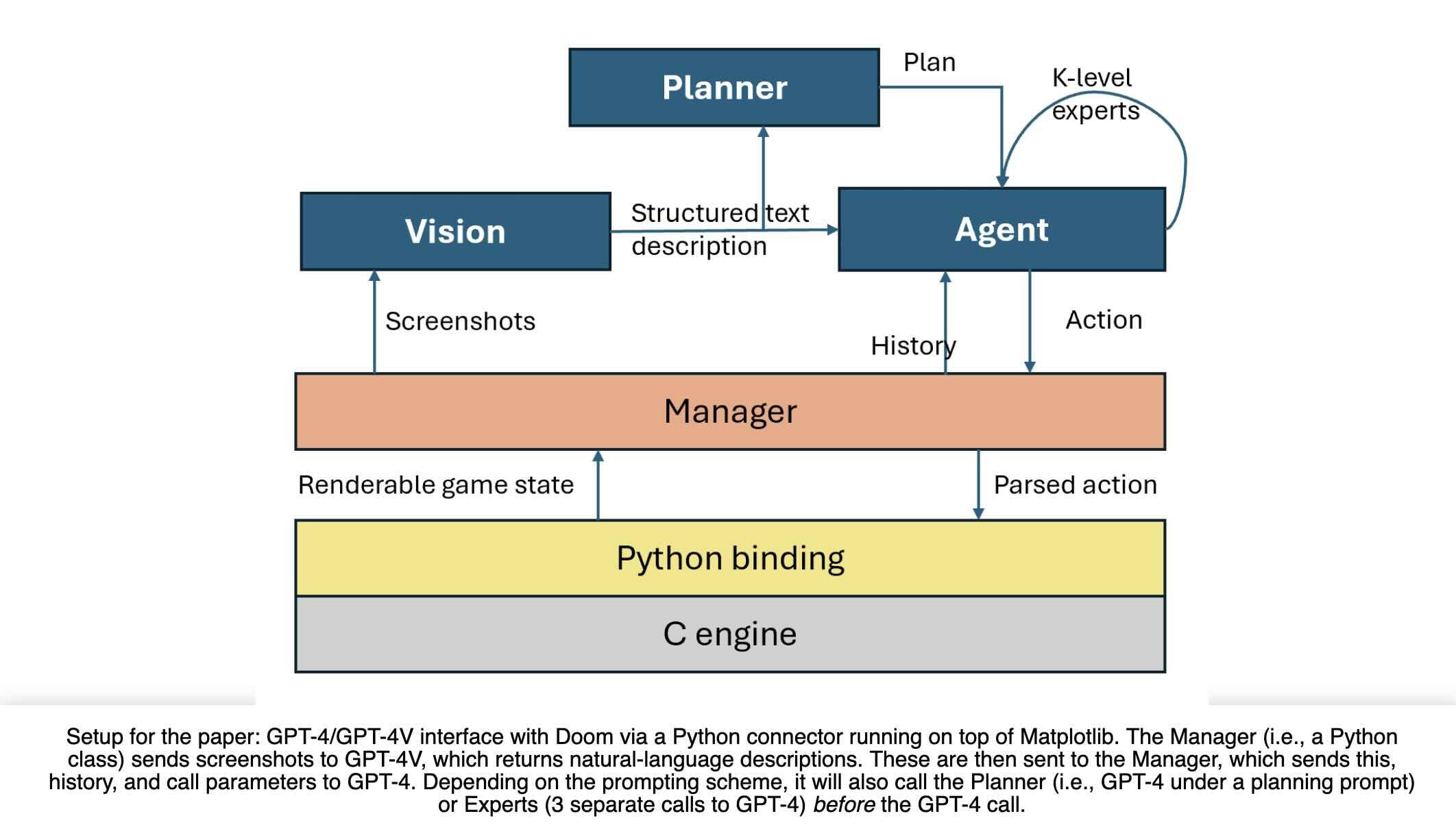

To manage this, de Wynter designed a Vision component that captures screenshots from the game engine and sends them to GPT-4V, which returns structured descriptions of the game state. He combined that with an Agent model that calls GPT-4 to make decisions based on the visual input and previous history. The Agent model has been told to translate its responses into keystroke commands that have meaning to the game engine.
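The article doesn't spell out the command format the Agent uses, so the following is only a minimal sketch of what "translating responses into keystroke commands" could look like. The action names, key bindings and comma-separated reply format are all assumptions, not taken from the paper. The point it illustrates is that free-text model output has to be filtered down to a fixed vocabulary before it reaches the engine.

```python
from typing import List

# Hypothetical action vocabulary: the paper's real command format is not
# described in the article, so these names and key bindings are assumptions.
ACTION_TO_KEY = {
    "FORWARD": "w",
    "BACKWARD": "s",
    "TURN_LEFT": "a",
    "TURN_RIGHT": "d",
    "SHOOT": "space",
    "USE": "e",
}


def to_keystrokes(agent_reply: str) -> List[str]:
    """Translate a comma-separated agent reply into engine keystrokes.

    Unknown actions are silently dropped rather than passed to the engine,
    because a language model may emit commands that do not exist.
    """
    keys = []
    for token in agent_reply.split(","):
        action = token.strip().upper()  # normalise case and whitespace
        if action in ACTION_TO_KEY:
            keys.append(ACTION_TO_KEY[action])
    return keys


print(to_keystrokes("turn_left, SHOOT, dance"))
# ['a', 'space']
```

Dropping unrecognised actions is one design choice among several. A real harness might instead ask the model to try again.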

Interactions are handled through a Manager layer consisting of an open source Python binding to the C Doom engine running on Matplotlib.
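To make the Vision/Agent/Manager split concrete, here is a rough sketch of the control loop the article describes: grab a frame, have the Vision step describe it, have the Agent pick keys using that description plus history, then send the keys to the engine. This is not de Wynter's code. The `Manager` class and its `capture`, `describe`, `decide` and `press` hooks are hypothetical names, and the GPT-4V and GPT-4 calls are replaced with plain Python stubs so the example runs without any API access.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Manager:
    """Hypothetical loop: screenshot -> Vision -> Agent -> keystrokes.

    capture  stands in for the engine binding that returns a frame,
    describe stands in for the GPT-4V call (frame -> text description),
    decide   stands in for the GPT-4 call (description + history -> keys),
    press    stands in for whatever injects keys into the engine.
    """
    capture: Callable[[], bytes]
    describe: Callable[[bytes], str]
    decide: Callable[[str, List[str]], List[str]]
    press: Callable[[str], None]
    history: List[str] = field(default_factory=list)

    def step(self) -> List[str]:
        frame = self.capture()                   # screenshot from the engine
        state = self.describe(frame)             # Vision: describe the frame as text
        keys = self.decide(state, self.history)  # Agent: choose keys from state + history
        for key in keys:
            self.press(key)                      # forward each keystroke to the engine
        self.history.append(state)               # remember what was seen this turn
        return keys


# Usage with stub functions in place of the engine and the models.
pressed: List[str] = []
mgr = Manager(
    capture=lambda: b"fake-frame",
    describe=lambda frame: "imp ahead" if frame else "nothing",
    decide=lambda state, hist: ["space"] if "imp" in state else ["w"],
    press=pressed.append,
)
for _ in range(2):
    mgr.step()
print(pressed)           # ['space', 'space']
print(len(mgr.history))  # 2
```

Keeping each stage behind a plain callable means the models or the engine binding could be swapped without touching the loop. That is roughly the separation of concerns a Manager layer is meant to provide.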

De Wynter nonetheless considers it remarkable that GPT-4 is capable of playing DOOM without prior training.

At the same time, he finds that troubling.

"On the ethics department, it is quite worrisome how easy it was for (a) me to build code to get the model to shoot something; and (b) for the model to accurately shoot something without actually second-guessing the instructions," he wrote in his summary post.

"So, while this is a very interesting exploration around planning and reasoning, and could have applications in automated video game testing, it is quite obvious that this model is not aware of what it is doing. I strongly urge everyone to think about what deployment of these models [implies] for society and their potential misuse."
