Hello all,

As you might know, I've sunk an unreasonable amount of time into improving the AI's performance in combat, the so-called "Tactical AI". It now works a little like a chess engine: it simulates different moves and tries to score the outcome. With lots of units involved, we quickly end up with thousands of options. So the question is how long to search, because that affects both the runtime and the quality of the chosen moves.
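To make the chess-engine comparison concrete, here is a minimal brute-force sketch. It is not the actual Tactical AI code. Each unit has a list of candidate moves, every full combination counts as one "completed position", and the search stops once a budget is used up. The names `tactical_search`, `unit_moves` and `score` are hypothetical, and the real search presumably prunes and orders moves instead of enumerating everything. With 10 units and 5 moves each there are already 5^10 = 9,765,625 combinations, which is why a cap is needed.

```python
import itertools
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical representation: one chosen move per unit, in unit order.
Assignment = Tuple[str, ...]


def tactical_search(
    unit_moves: Sequence[Sequence[str]],
    score: Callable[[Assignment], float],
    max_completed_positions: int,
) -> Tuple[Optional[Assignment], float, int]:
    """Enumerate move combinations in lexicographic (depth-first) order.

    Every full assignment counts as one completed position. Once the budget
    is exhausted, the best assignment seen so far is returned together with
    its score and the number of positions actually completed.
    """
    best: Optional[Assignment] = None
    best_score = float("-inf")
    completed = 0
    for assignment in itertools.product(*unit_moves):
        if completed >= max_completed_positions:
            break  # budget used up: settle for the best move found so far
        completed += 1
        s = score(assignment)
        if s > best_score:
            best, best_score = assignment, s
    return best, best_score, completed
```

With a toy score where "attack" is worth 3, "hold" 1 and "retreat" 0, three units give 27 positions; a budget of 27 finds the all-attack plan, while a budget of 5 stops early at a worse plan.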

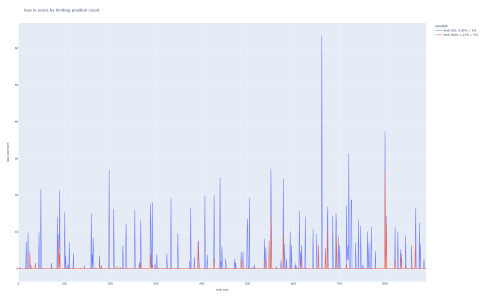

Here's a diagram showing some numbers from a test game.

How to read this? I used a very high limit for the search depth, without regard for performance. Then I checked which "good" scores we would miss out on if we used a lower limit instead.
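The comparison can be sketched like this, assuming the scores of the completed positions from the high-limit run are recorded in the order they were completed, and assuming scores are positive so that "x% worse" is well defined. Under those assumptions, a run capped at N positions would have found the maximum of the first N scores. The function names are hypothetical, not taken from the mod.

```python
from typing import Sequence


def best_within_limit(scores_in_order: Sequence[float], limit: int) -> float:
    """Best score a search stopped after `limit` completed positions would find."""
    if limit <= 0 or not scores_in_order:
        raise ValueError("need at least one completed position")
    return max(scores_in_order[:limit])


def relative_shortfall(scores_in_order: Sequence[float], limit: int) -> float:
    """Fraction by which the limited result falls short of the best known score."""
    best_known = max(scores_in_order)  # best score from the full, high-limit run
    if best_known <= 0:
        raise ValueError("this sketch assumes positive scores")
    limited = best_within_limit(scores_in_order, limit)
    return (best_known - limited) / best_known
```

For example, with scores `[10, 40, 30, 100, 95]` a limit of 2 only reaches 40, which is 60% worse than the best known 100, while a limit of 4 already finds 100.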

Summary:

* with a limit of 200 (which I propose for Chieftain level), the best move found is more than 5% worse than the best known move in 11.3% of the simulations

* with a limit of 2000 (which I propose for Emperor level), the best move found is more than 5% worse than the best known move in 1.3% of the simulations

* most of the time there is in fact zero difference (because only a few units are involved)

* in very rare cases (<20 out of 7000) the difference is greater than 50%

* it's not clear that a better score always corresponds to better moves; the scoring is not perfect, so take everything with a grain of salt
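Statistics like the ones in the list can be aggregated over all simulations roughly as follows. This is a hypothetical sketch, assuming each simulation is stored as its list of completed-position scores (in completion order, all positive) and that the limit is at least 1; the thresholds 0, 5% and 50% mirror the bullets above.

```python
from typing import Dict, Sequence


def shortfall(scores_in_order: Sequence[float], limit: int) -> float:
    """Relative gap between the best known score and the best within `limit`."""
    best_known = max(scores_in_order)
    if best_known <= 0:
        raise ValueError("this sketch assumes positive scores")
    return (best_known - max(scores_in_order[:limit])) / best_known


def summarize(simulations: Sequence[Sequence[float]], limit: int) -> Dict[str, float]:
    """Share of simulations with no loss or more than 5% loss, plus count over 50%."""
    gaps = [shortfall(s, limit) for s in simulations]
    n = len(gaps)
    return {
        "share_zero": sum(g == 0 for g in gaps) / n,
        "share_over_5pct": sum(g > 0.05 for g in gaps) / n,
        "count_over_50pct": sum(g > 0.5 for g in gaps),
    }
```

Simulations with only a few units tend to finish well within any limit, which is why most gaps come out as exactly zero.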

You will be able to customize the search depth with the TacticalSimMaxCompletedPositions parameter in DifficultyMod.xml. Note that the numbers in the current version are different; this is only a preview for the next release!

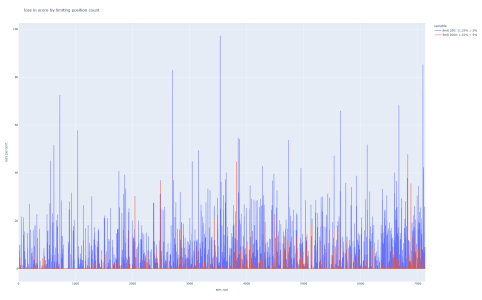

Here's another result from a shorter run, with only 900 simulations:

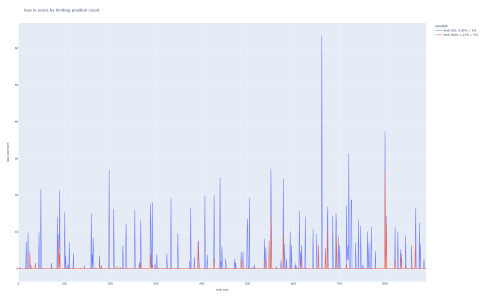

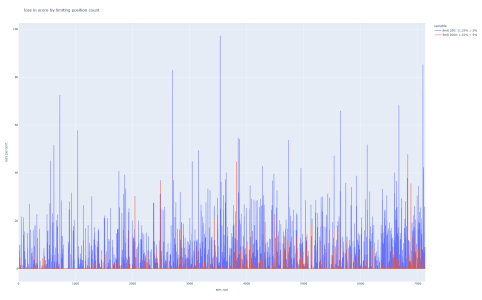