Read before voting:

The poll is unlimited choice. Vote for anything that should have further regulation/criminalisation. Do not vote for things that should have less regulation/criminalisation. No neutrals are allowed and the status quo is not an option: you have to vote one way or the other (until CFC adds multi-option questions).

Intro

There are a number of efforts around the world to criminalise some aspects of computing technology, development processes or those who create them. Many of these are new laws or novel applications of existing laws. Which areas do you think should have more regulation Alternatively are there areas that should have less regulation?

Here are some areas that have attracted attention recently. There is a lot of overlap, both in the politics/morality and in the maths/technology but those overlaps are frequently different so I hope this way of splitting them up halps.

Encryption

Many countries are looking at banning end to end encryption. In the US we have the EARN IT act, in the UK we have the online safety bill. The really worrying thing about these bill is for them to have got as far as they have got one ofa fw things must be true:

Cryptocurrency/blockchain

There are lots of moves to criminalise Cryptocurrency and blockchain tech. We have actual fraud against the customers such as FTX, we have criminalisation of software programs and their developers in the case of Tornado Cash. We have attempts to make all token holders legally responsible for the actions of DAOs. We have people who call for their criminalisation from an environmental standpoint.

On that latter, looking for references to their viewpoints I googled "cryptocurrency protest organisation" and the point for them becomes clear. For me at least all the hits are protest organisations using cryptocurrency to get around government surveillance and attempts to silence their organisations. In a world where physical cash is giving way to electronic transfers I think the world needs a way to do that without governments being able to read and potentially write those transactions at will.

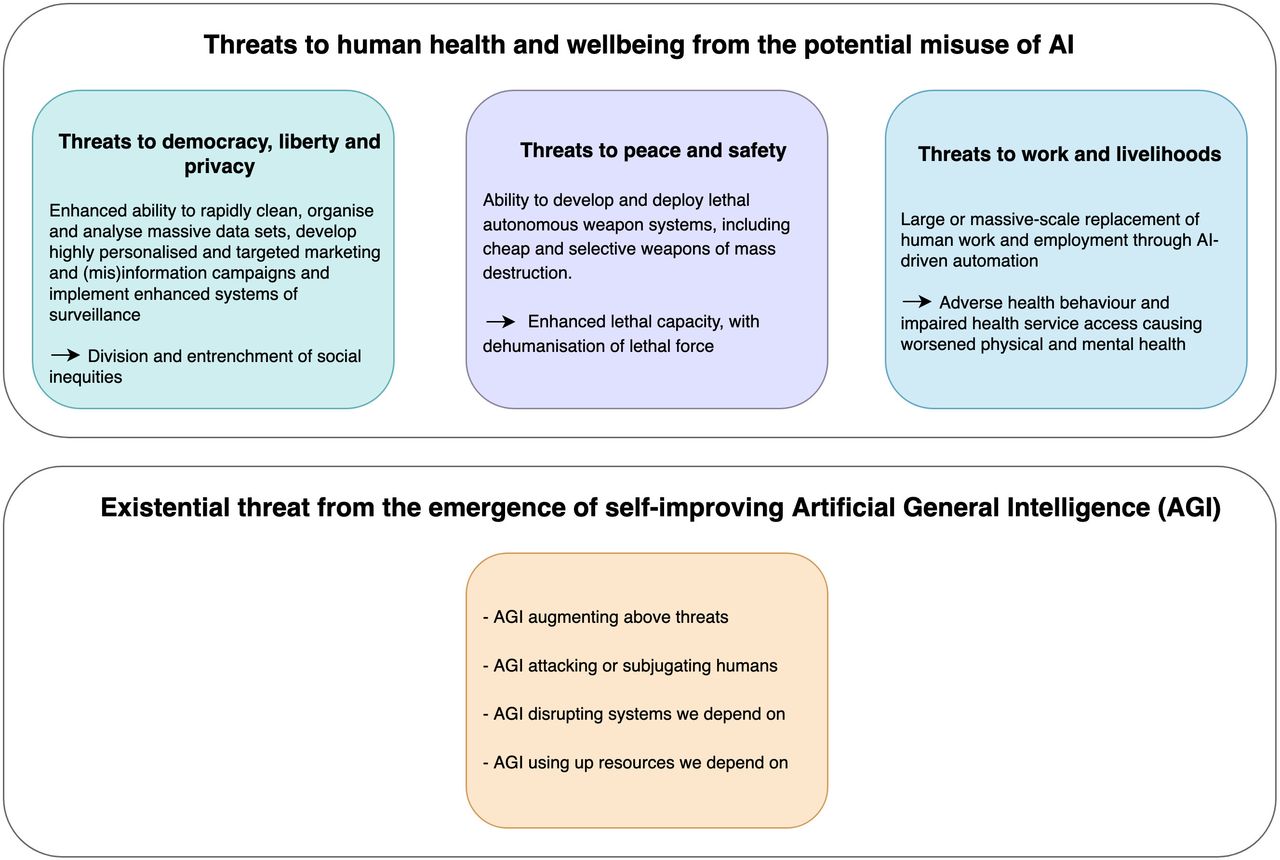

Artificial Intelligence

This is actually this article in the Grundiad that prompted the thread. It has four specific complaints that I can address:

Hallucination #1: AI will solve the climate crisis

Nature, search "climate machine learning" sort date. Two published today, including a model improving agricultural production in the face of climate change. Yesterday three, including one modelling the antarctic ocean and another "Optical tuner for sustainable buildings". You cannot expect machines to convince politician and the public when decades of science has not, but those scientists are using AI to refine their models and come up with mitigations. This is a good thing.

Hallucination #2: AI will deliver wise governance

They quote Boston Consulting Group. This is just a joke, anyone who believed this was always deluding themselves.

Hallucination #3: tech giants can be trusted not to break the world

No, totally not. AI cannot be controlled by big tech and we can never trust them. Check out the open source tools, google is afraid of them.

Hallucination #4: AI will liberate us from drudgery

Just like the spinning jenny AI will allow some jobs to be automated. That will "liberate" the people who currently do those jobs from both drudgery and their jobs. The alternative, renouncing the use of such tech did not work well for those countries that did not use the spinning jenny in their textile industries and is not likely to work well for those who do not use AI in any industry it can improve productivity.

Personally Identifiable Information processing

The UK and EU have the GDPR, though it is largely ignored other than maximising the popup pain we have to go through. The California Consumer Privacy Act (CCPA) is similar I believe though I know nothing about it or if enforcement is a thing.

I think a big issue on the horizon that is not covered by the GDPR is household use. Currently this is not covered, so you can do what you like as long as you keep it within the house. The new AI tools combined with many peoples propensity to post PII online, perhaps combined with more traditional network analysis tools will put a lot of people's lives into everyone's hands. As I understand it this is completely unregulated.

Product liability

The EU is pushing the European Cyber Resilience Act (“CRA”). I am not an expert, but I think it means you cannot deny liability for harm caused by someone elses your software even if you declare it not fit for purpose. As I understand it, if you write a bit of code (say a Civ MOD) and release it freely under the GLP which specifically denies any presumption of fitness of purpose and someone uses that in a car and it blows up, you can be held liable. I do not think that is a good thing.

Anonymity

There are calls for banning anonymity online. The UK Govs page says "Main social media firms will have to give people the power to control who can interact with them, including blocking anonymous trolls". Now I totally agree that the lack of control that people have over what they are shown is an issue. If what they are taking about is a "Only show twitter blue users" then I do not think we need a law for that. If they are talking about legislating users have fully control of what they are shown I am all for that. I think we all know what they really want though, and it is more about tracking their enemies than "thinking of the children".

The Dark Web

Dark Web marketplaces are under constant DOS attack. It is impossible to know who is doing it, but those with both the most incentive and opportunity have three letter abbreviations and tax payer funding. I do not know what the environmental and social impact of this is, but I suspect it is significant and many of those here are paying for it. Should we be doing this, or perhaps more?

Algorithms

Canada's Online Streaming Act, commonly known as Bill C-11 I think allows the regulator to control what the big tech social media algorithms feed people, with the assumption they will use it to increase the consumption of Canadian content as they do with radio (and TV?). It is an interesting one, I cannot imagine how it would work but I would love to hear opinions.

Death Robots

The development of remote and autonomous weapons has advanced greatly, with Türkiye becoming a world leader. Are some lines we should not be crossing as a species, such as autonomous decision making? I kind of think the cat is out of the bag on that one, but is there another line we could try and not cross?

The poll is unlimited choice. Vote for anything that should have further regulation/criminalisation. Do not vote for things that should have less regulation/criminalisation. No neutrals are allowed, the status quo is not acceptable, you have to vote one way or another (until CFC adds multi option questions).

Intro

There are a number of efforts around the world to criminalise some aspects of computing technology, development processes or those who create them. Many of these are new laws or novel applications of existing laws. Which areas do you think should have more regulation? Alternatively, are there areas that should have less regulation?

Here are some areas that have attracted attention recently. There is a lot of overlap, both in the politics/morality and in the maths/technology, but those overlaps are frequently different, so I hope this way of splitting them up helps.

Encryption

Many countries are looking at banning end-to-end encryption. In the US we have the EARN IT Act, and in the UK we have the Online Safety Bill. The really worrying thing about these bills is that, for them to have got as far as they have, one of a few things must be true:

- The whole of the senior government is completely unable to understand the principles behind encryption and is unwilling to listen to anyone who understands it

- They know it will never work without an authoritarian criminalisation of the tools that you are using to view this message and so know it will fail but that will help them because reasons

- They know it will never work without an authoritarian criminalisation of the tools that you are using to view this message and so expect to use authoritarian criminalisation of the tools that you are using to view this message

Cryptocurrency/blockchain

There are lots of moves to criminalise cryptocurrency and blockchain tech. We have actual fraud against customers, as with FTX; we have criminalisation of software programs and their developers, as in the case of Tornado Cash; and we have attempts to make all token holders legally responsible for the actions of DAOs. We also have people who call for their criminalisation from an environmental standpoint.

On that last point, while looking for references to their viewpoint I googled "cryptocurrency protest organisation", and the point for them becomes clear: for me at least, all the hits are protest organisations using cryptocurrency to get around government surveillance and attempts to silence their organisations. In a world where physical cash is giving way to electronic transfers, I think the world needs a way to make payments without governments being able to read, and potentially write, those transactions at will.

Artificial Intelligence

It was actually this article in the Grundiad that prompted the thread. It makes four specific complaints that I can address:

Hallucination #1: AI will solve the climate crisis

Search Nature for "climate machine learning" and sort by date. Two papers were published today, including a model for improving agricultural production in the face of climate change, and three yesterday, including one modelling the Antarctic ocean and another titled "Optical tuner for sustainable buildings". You cannot expect machines to convince politicians and the public when decades of science have not, but those scientists are using AI to refine their models and come up with mitigations. This is a good thing.

Hallucination #2: AI will deliver wise governance

They quote Boston Consulting Group. This is just a joke; anyone who believed this was always deluding themselves.

Hallucination #3: tech giants can be trusted not to break the world

No, totally not. AI cannot be controlled by big tech, and we can never trust them. Check out the open source tools; Google is afraid of them.

Hallucination #4: AI will liberate us from drudgery

Just like the spinning jenny, AI will allow some jobs to be automated. That will "liberate" the people who currently do those jobs from both drudgery and their jobs. The alternative, renouncing the use of such tech, did not work well for the countries that did not use the spinning jenny in their textile industries, and it is not likely to work well for those who do not use AI in any industry where it can improve productivity.

Personally Identifiable Information processing

The UK and EU have the GDPR, though it is largely ignored apart from maximising the pop-up pain we have to go through. I believe the California Consumer Privacy Act (CCPA) is similar, though I know nothing about it or whether enforcement is a thing.

I think a big issue on the horizon is household use, which the GDPR does not cover: you can do what you like with personal data as long as you keep it within the house. The new AI tools, combined with many people's propensity to post PII online and perhaps with more traditional network analysis tools, will put a lot of people's lives into everyone's hands. As I understand it, this is completely unregulated.

Product liability

The EU is pushing the European Cyber Resilience Act ("CRA"). I am not an expert, but I think it means you cannot deny liability for harm caused by someone else's use of your software, even if you declare it not fit for purpose. As I understand it, if you write a bit of code (say a Civ mod) and release it freely under the GPL, which explicitly disclaims any warranty of fitness for a particular purpose, and someone uses it in a car and the car blows up, you can be held liable. I do not think that is a good thing.

Anonymity

There are calls for banning anonymity online. The UK Government's page says "Main social media firms will have to give people the power to control who can interact with them, including blocking anonymous trolls". Now, I totally agree that the lack of control people have over what they are shown is an issue. If what they are talking about is an "Only show Twitter Blue users" option, then I do not think we need a law for that. If they are talking about legislating that users have full control of what they are shown, I am all for that. I think we all know what they really want, though, and it is more about tracking their enemies than "thinking of the children".

The Dark Web

Dark Web marketplaces are under constant DoS attack. It is impossible to know who is doing it, but those with both the most incentive and the most opportunity have three-letter abbreviations and taxpayer funding. I do not know what the environmental and social impact of this is, but I suspect it is significant, and many of those here are paying for it. Should we be doing this, or perhaps more?

Algorithms

Canada's Online Streaming Act, commonly known as Bill C-11, allows (I think) the regulator to control what the big tech social media algorithms feed people, with the assumption that it will use this to increase the consumption of Canadian content, as it does with radio (and TV?). It is an interesting one; I cannot imagine how it would work, but I would love to hear opinions.

Death Robots

The development of remote and autonomous weapons has advanced greatly, with Türkiye becoming a world leader. Are there some lines we should not cross as a species, such as autonomous decision making? I kind of think the cat is out of the bag on that one, but is there another line we could try not to cross?