Well I don't have time to keep trying to teach you anything.

Go look it up. As for the other patrons here...

Wow, that's incredibly rude. Luckily, I don't need you to teach me anything... because my *actual job* is optimizing games for multi-core architectures. Now of course, we all have things we can learn, myself included, but having done it for years, I feel I can say without arrogance that this is something I'm both very knowledgeable about and quite good at.

I should probably assume from your tone that there's no point in me trying to argue this further, but I will try anyway, because, well, you never know.

You posted a long list of titles that don't benefit from hyperthreading, and actually perform worse with it. I do not dispute that this is the case. But none of those games is well-optimized for large core counts, so they are, by definition, not hyperthread-friendly.

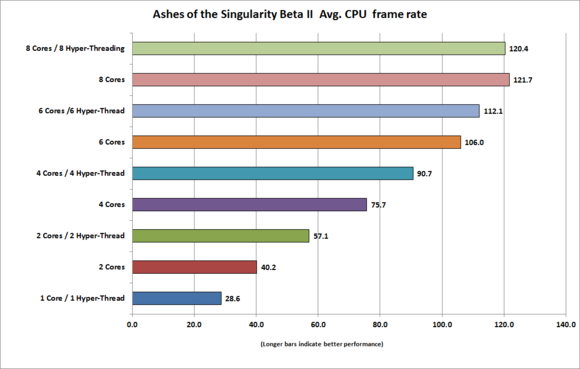

Here is a benchmark that actually demonstrates the effect of hyperthreading:

As you can see, once a certain number of cores (8) is reached, there is no benefit from hyper-threading, because there is no more work to be distributed, and lo and behold, the performance is worse with hyperthreading turned on.

But at every other core count, the performance is *significantly* better with hyperthreading on, with the gain tapering off somewhat at 6 cores, likely because there is a bottleneck thread.

At 8 cores, that bottleneck thread is holding everything back.

For all the games you've listed (without exception) there is a bottleneck thread holding the game back from better performance *at the core count tested*.

The simplest example of this is a 1-thread game. It *cannot* perform better on a 2-core, or on a 1-core w/ hyperthreading, than it does on a plain 1-core. However, a 2-thread game is *guaranteed* to perform better on a 1-core w/ hyperthreading than on a 1-core without it. (Assuming both threads do a significant amount of work)

The reality of most games is that there is a single thread that is by far the most expensive. Once you expose that thread (put it on a core by itself) it's impossible for performance to improve with more cores, or with hyper-threading.
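To put the bottleneck-thread argument in concrete terms, here is a rough Python sketch of a lower bound on frame time. It is a toy model, not a measurement: the function name `frame_time_ms`, the per-thread costs, and the `ht_yield` factor (how much extra throughput a core gets from hyper-threading, set here to an assumed 1.25) are all made up for illustration. It also ignores cache contention and scheduling overhead, so it can never show hyper-threading making things worse.

```python
def frame_time_ms(thread_costs_ms, physical_cores, ht_enabled=False, ht_yield=1.25):
    """Idealized lower bound on frame time for a set of game threads.

    thread_costs_ms: per-frame cost of each thread when it has a core to itself.
    ht_yield: ASSUMED throughput multiplier per core with hyper-threading on.
    """
    if not thread_costs_ms or physical_cores < 1:
        raise ValueError("need at least one thread and one core")

    # Total work the machine can retire per unit time, in "core equivalents".
    throughput = physical_cores * (ht_yield if ht_enabled else 1.0)

    # A single thread cannot be split, so the most expensive thread is a floor.
    bottleneck = max(thread_costs_ms)

    # Otherwise the frame is limited by total work divided by total throughput.
    return max(bottleneck, sum(thread_costs_ms) / throughput)
```

With a single 10 ms thread, the model gives 10 ms on a 1-core, a 2-core, and a 1-core w/ hyperthreading alike. With two 10 ms threads, a 1-core drops from 20 ms to 16 ms once hyperthreading is enabled (under the assumed 1.25 yield). Once the bottleneck term wins the `max`, adding cores or hyperthreads changes nothing.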

With the advent of the X360 & PS3 (a 3-core & a 1-core, respectively, both with hyper-threading), developers started to really focus on splitting that bottleneck thread into two threads. (Main & Render)

I won't get into the mess that was PS3 development, but 360 developers often went a step further, adding a physics thread, a particle thread, and a network thread. A large number of games utilize all the hyperthreads on the 360.

It is a fact that 360 games which fully utilized all available hyper-threads would not have run anywhere near as fast with hyper-threading turned off.

With the advent of the XB1 and PS4, even more titles started targeting 6 cores. Neither of those platforms is hyper-threaded, because AMD is super-late to the party. Their first hyper-threaded chip, Zen, will launch in Q1 2017.

AMD's decision not to go with hyper-threading almost put them out of business. But go ahead, believe it doesn't help.

The reason I keep mentioning consoles is that multi-platform games get the most optimization attention on console, simply because console is usually the worst performer. If the CPU performance of a title is worse on a person's PC than on console, it's a *really* crappy CPU.

So, XB1 and PS4 have pushed more and more games to be 6-core friendly. However, rendering thread performance is almost always the bottleneck. So devs started focusing on multi-threaded rendering.

The push for this was driven largely by console, so naturally what you see is that most games don't benefit from more than 6 logical cores. What this means is that a 3-core w/ Hyperthreading is about the most that a lot of games really need. Also, a lot of developers didn't bother to make the PC version multithreaded at all, because the XB1 and PS4 are so underpowered relative to a modern PC.

Which is why an i7-920 is still relevant. It is comparable to, if not faster than, an XB1 or PS4, and has more logical cores available to developers. So no one has been targeting anything better.

To recap: There is one simple reason why games often don't benefit from hyper-threading. They're usually poorly multi-threaded. One thread is usually such a bottleneck that it takes longer per frame than all other threads combined, meaning that a 2-core machine without hyperthreading is all you need.
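As a back-of-the-envelope check on the "2-core is all you need" point, here is a small Python sketch. The function name `cores_until_bottleneck` and the example costs are hypothetical, and it assumes the non-bottleneck threads can be packed onto the remaining cores without waste, which is optimistic in general.

```python
import math


def cores_until_bottleneck(thread_costs_ms):
    """Estimate how many cores it takes before the slowest thread is the limit.

    Beyond this count, extra cores (physical or logical) cannot shorten the
    frame, because the most expensive thread already has a core to itself.
    ASSUMPTION: the remaining threads pack perfectly onto the other cores.
    """
    if not thread_costs_ms or min(thread_costs_ms) <= 0:
        raise ValueError("thread costs must be positive")

    total = sum(thread_costs_ms)
    longest = max(thread_costs_ms)
    return math.ceil(total / longest)
```

For a game with threads costing 16, 5, 4 and 3 ms per frame, the other threads add up to 12 ms, less than the 16 ms bottleneck, so the estimate is 2 cores. Four evenly balanced 10 ms threads would give 4.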

But that is changing as we speak.

DX12 in particular is changing that. DX11 helped. Civ6, even with only 2 render threads (I assume) in DX11 mode, is already one of the most multi-core friendly games ever made (if the benchmark tool can be believed) and it still has a lot of room for improvement, as I mentioned earlier in the thread. This trend will only continue.

So, yes, unfortunately, most existing games don't benefit much from large core counts, whether they be logical (Hyper-thread) or physical cores. But that is because the game itself was not optimized.

Every game that is multi-core friendly benefits dramatically from hyper-threading until a certain core count is reached.

This is not an opinion. This is a fact.

However, although your conclusions were wrong, we actually agree on many things you said. As I mentioned, I only switched from an i7-920 this year, because this was the first year that having more than 8 logical cores helped *any game*. Civ Beyond Earth didn't, despite using Mantle. It only really used 6 logical cores if I recall. Rise of the Tomb Raider (DX12) and Doom are the first games that I've seen benefit from it.

Not that many games took advantage of Mantle. Doom is really the first optimized Vulkan game, and RotTR was one of the first optimized DX12 games. (AotS being the other obvious one)

So, yes, in the past, it was often true that turning on hyper-threading hurt performance, but only because those games didn't need the extra cores. But, going forward, this is changing. You'll see soon enough.

It's a chicken-and-egg thing. Developers won't target 8-core/16HT machines as long as people who have them are in the minority. People won't buy them until they help performance. Hence, XB1 and PS4 have largely driven multi-core utilization.

But right now, this is changing. Now that *most* PC owners have 8+ logical cores, it will quickly become a competitive disadvantage for a title to not take advantage of them. Now that Windows 10 is starting to take over, DX12 has enough momentum to justify developers targeting it.

Now, if you're still not convinced, I'll do some benchmarking when I have time, on my 12-core/24HT machine, with a variety of configurations (# of cores enabled, hyper-threading on or off, etc.).

I'll even make a little chart, showing exactly how much hyper-threading (at each core count) helps Civ6 performance.
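For the chart, the bookkeeping could look something like the Python sketch below. The function name and the input layout are hypothetical, and no real measurements are included here; it only shows how the per-core-count comparison would be computed once the numbers exist.

```python
def ht_speedup_by_core_count(frame_times_ms):
    """Compare HT-on vs HT-off results at each physical core count.

    frame_times_ms maps (physical_cores, ht_enabled) -> average frame time in ms.
    Returns {physical_cores: speedup}, where a speedup above 1.0 means
    hyper-threading helped and below 1.0 means it hurt.
    Core counts missing either the HT-on or HT-off run are skipped.
    """
    speedups = {}
    for (cores, ht_enabled), ht_off_ms in frame_times_ms.items():
        if ht_enabled:
            continue  # only start from the HT-off entries
        ht_on_ms = frame_times_ms.get((cores, True))
        if ht_on_ms is None:
            continue  # no matching HT-on run for this core count
        speedups[cores] = ht_off_ms / ht_on_ms
    return dict(sorted(speedups.items()))
```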

Cheers,

Cro