Core Imposter

Deity

- Joined: May 13, 2011

- Messages: 4,534

We've already had a lawyer jammed up when he used AI to find citations (that turned out to be bogus) in support of one of his cases. Now we have another case with totally made-up book reviews. What, exactly, are they training the AI with?

news.yahoo.com


Gemini spat out summaries of four negative reviews, ostensibly from the Washington Free Beacon, The New York Times, The New York Times Book Review and Wired, including quotes from each:

But here’s the catch. None of those reviews were real. Not one. And neither were any of the quotes.

- "Book review by Matthew Continetti in The Washington Free Beacon: This review criticizes the book for relying on 'anecdotal evidence' and 'cherry-picking examples' to support its claims.

- Book review by Emily Bazelon in The New York Times Book Review: This review notes that the book 'lacks a deep understanding of how these companies work' and relies on 'unproven accusations.'

- Article by Emily Dreyfuss in Wired: This article explores the 'dearth of evidence' for the book's claims about Big Tech bias and highlights examples of cherry-picking.

- Article by Ben Smith in The New York Times: This article discusses the book's lack of rigorous research and its reliance on 'unsubstantiated claims.'"

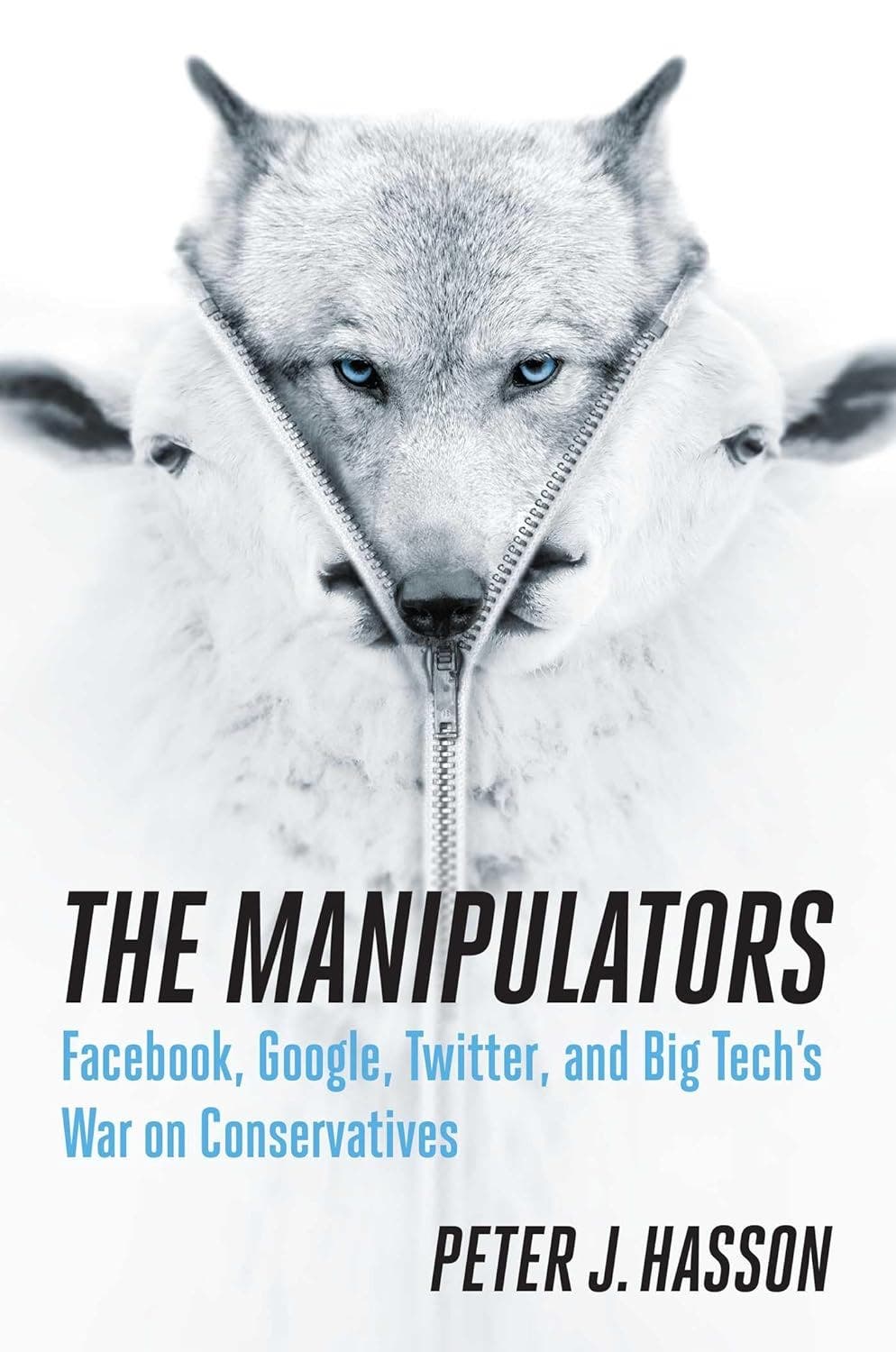

Google Gemini invented fake reviews smearing my book about Big Tech’s political biases

Google's new AI chatbot, Google Gemini, tried to discredit my 2020 book on political biases at Google and other big tech companies by inventing several fake reviews.