The game industry is dominated by the big names for a reason. The server market is dominated by open source (OS), and I bet most AI training is done on the Linux kernel at least. OS is also dominant in the mobile market, to an extent. I can agree that the open source dynamic will proceed in parallel. My view is that the centralised AI process will be more efficient at attracting the best specialists by way of remuneration, so centralised development will remain dominant in terms of market share. The same dynamic can be observed in other forms of software development: there is a thriving game mod community, but it's the centralised dev studios that rake in most of the consumer cash. Corporations can use patents, censorship and legislation to protect their interests and the various pathways toward them. The open source community cannot, by definition.

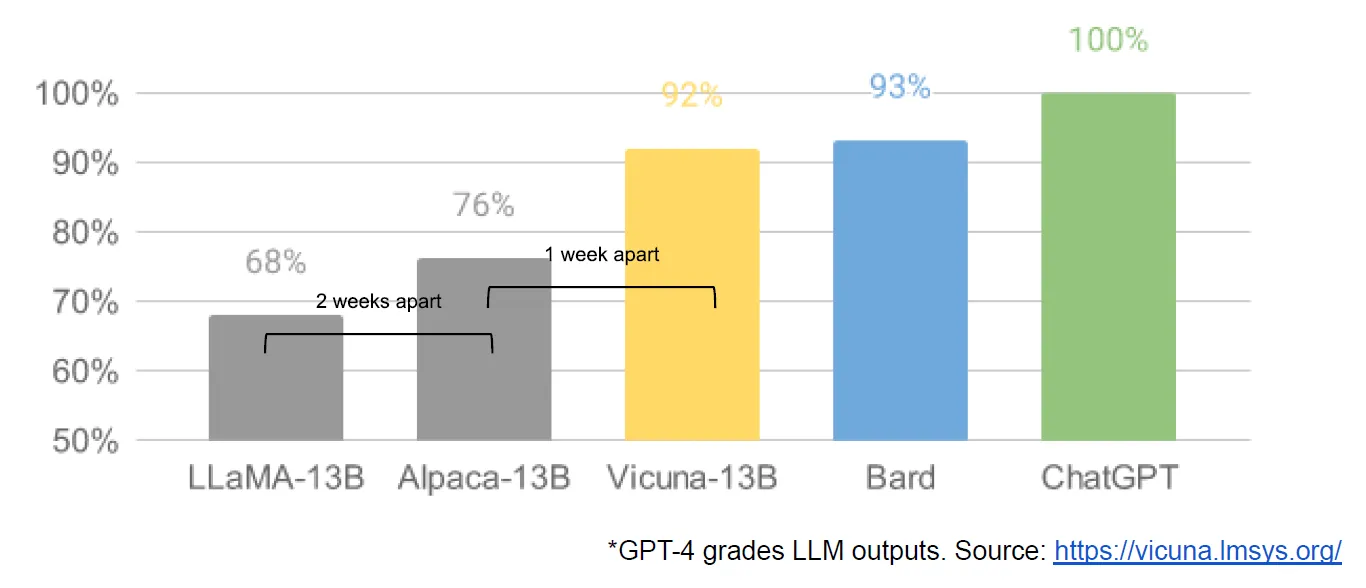

And yeah, you're absolutely right about a big push in open source. I'm not big on the subject myself, but specialist programmers I've watched on YouTube mention that many open source alternatives are nearly as good as GPT-4.

The OS community totally can use copyright to protect itself; that is what the GPL does. All the big ones are released under the Apache license at the moment, which does not, but that could change. I think the world should have learned the danger of that from the macOS thing.

Useful TIL. (Unless the "especially" part is also an active ingredient in the definition.)