The current trend in AI is not geared towards research or scientific breakthroughs. It's geared more towards implementation in an expanding range of consumer products and services, and its presence will become less and less obvious. A bit like some of those apps that come pre-installed on everyone's smartphone, which very few people are even aware exist. By 2030, consumer-grade AI will be integrated everywhere and we won't even notice it is there.

Warfare

- Thread starter Birdjaguar

- Start date

Akka

Moody old mage.

Pretty interesting stuff here, thank you.

In that case I think we'll probably agree when I say that fundamentally I feel we aren't. There is nothing that would make a human mind somehow magically superior to a hypothetical machine mind of equal capability. Just as there is nothing that would make us inherently superior to an alien or an uplifted animal.

I just do not think that the current generative AI approach is going to ever produce such a mind due to the inherent technical limitations of its fundamental methods of operation.

It's difficult to explain in English for me. But I'll try.

Basically what distinguishes what generative AI does from what we do is that we operate in the field of metainformation where it does not. This allows us to operate in basically a higher order space than generative AI.

Fundamentally, the pattern recognition part of intelligence is pretty much the same for both of us, in that we both create patterns where there are none based on statistical correlation in order to form what we in human terms call "concepts", where a concept is something I define as the categorization of things into groups defined by the phrase "I can't explain it but I know it when I see it."

However, the difference is that humans have a higher order awareness of the fact we are doing this, whereas the generative AI approach is fundamentally incapable of producing a system that has such an awareness. So whereas both humans and AI can operate within a single concept to formulate output based on data, AI cannot then take the extra step of taking multiple concepts and using them as building blocks for something higher.

That is why you will see AI producing text that looks like human speech but can actually be complete nonsense. There is, for example, a famous legal case in America where the lawyers asked an AI to find them cases to support their legal requests and the AI just made stuff up. That AI output is perfectly valid within the confines of the concept that the AI was designed for, that being speech. It's just invalid in the broader concept group of speech + law, which the AI was unable to comprehend.

And it is my professional opinion that the generative, statistics-driven learning approach is fundamentally incapable of producing anything beyond that, because the very way it is designed has a deep focus on mastering one concept based on a sea of data. If we wanted to create a true General AI we would need to fundamentally change some of the principles of design at play.

Which is not to say that the current approach is not a stepping stone toward that. It absolutely is. But it's a stepping stone in the same sense that the arrow was one to the assault rifle. The process won't be one of derivation so much as inspiration: taking the principles learned by operating the one and applying them to the other, without a direct evolution of the actual products.

I hope that mostly made sense.

I understand your point about the inner working of AI being for now too, well, "low-level" let's say, to become a true "intelligence".

I do think, though, that we'll get surprised one day by a system that will become "intelligent" in a manner that we don't expect, due to our limited understanding of what intelligence is and the inherent difficulty of grasping the fundamentally "alien" way of thinking that such a system will have. I just hope we'll already be prepared when it happens.

Intelligence or awareness is, imo, a result of evolution over millions of years. Having awareness increases our odds of survival, and that is basically what evolution always strives towards.

I don't believe that process can be replicated by just developing powerful semiconductors and adding advanced algorithms to the mix. Why would awareness or intelligence suddenly manifest itself inside a machine or piece of software?

Remorseless1

Emperor

- Joined

- Jan 24, 2022

- Messages

- 1,214

Drones and AI are not as revolutionary as some proclaim. The game changer was the development of rocketry (V-1 and V-2). Missiles have increased in effectiveness ever since. Linking missiles with laser targeting systems in the 1960s accelerated this.

History has shown that every time a military develops a new tactic or weapon, their opponents eventually find an effective counter. Tanks over machine guns, AT missiles over tanks, etc. The Macedonian phalanx crushed Persian armies twice their size. But after being defeated by Pyrrhus' phalanx, the Romans developed sword-armed legions that were more maneuverable and basically ended the phalanx. Then the mobile horse archers of Persia whipped the legions. Same old story.

To sum up, humans will always find new ways to kill other people.

^ Agree with your post. Just want to add the little-known detail that von Braun didn't invent modern rocketry with his V designs; the American Robert Goddard did. The Americans and Brits were already experimenting with designs similar to what von Braun came up with; he just did it better than they were able to.

PPQ_Purple

Purple Cube (retired)

- Joined

- Oct 11, 2008

- Messages

- 5,764

Honestly, I don't think we will. If anything, I think that if AGI does happen it will happen due to a deliberate, difficult and directed effort to create it. It's just a thing that's too complex and has far too many moving parts for it to appear on accident.

banzay13

Emperor

It would be interesting to watch.

As said, it's going to be an interesting century to watch. Honestly, I am holding off to see if that American program for a new air dominance fighter, one that's more like a heavy bomber commanding a drone swarm, ever gets developed. Because if that thing happens, well, then we are really in the age of RTS warfare in the air.

If.

You have a rancho with big walls and machine guns in every corner.

Moriarte

Immortal

- Joined

- May 10, 2012

- Messages

- 2,692

Intelligence did appear on accident at least once already.

AGI will be the next level of intelligence on the way up the ladder: supercharged intelligence. The ability to perform every task a human performs, better than a human. Or, at the very least, to calculate the prerequisites for efficiently performing a task. Performing tasks is a matter of computation, efficiency, energy. In matters concerning intelligence, there is nothing divine or unknowable about counting beans in one manner or another. This rings true in science. Science = scientific method*time. Whether the end product will be an intelligence superior to human intelligence, I think this question is irrelevant. AI, whether external or internalised, will be the amplification mechanism for a cyborg/superhuman. Some scientists, somewhere, probably, are going to experiment with autonomous intelligences. But how autonomous can they really be, when they are, like us, subject to physics, politics and other surrounding forces? The main point of AI is to amplify humans, to serve humans. A human scientist, human soldier, musician, villain.... etc. A synthesis of instincts received at birth along with the intelligence baggage accumulated over a lifetime + AI to perform trillions of computations/sec to solve arising problems more efficiently.

Sometimes I hear a longing for a super intelligence which will solve humanity's problems (or eradicate humans entirely, ha!): the same old religious mysticism with a high-tech cover. Super-intelligence will solve the problems of the super wealthy. In tandem with the super wealthy.

I can also hear the chimes of mysticism when I hear that we will never solve a glorified monkey's intelligence. Intelligence is logical apparatus + volume of knowledge. We can already emulate it a little, as a consequence of us gaining a sufficient (but not complete) understanding of the concept of intelligence. Should we eventually give AI free will and let it loose with an AK strapped to its back? That is the million dollar question. To me the answer is a resounding No, with a 10-year prison sentence if one decides to neglect a (future) intergovernmental treaty on the creation of autonomous agents.

What makes you think that such a 'super intelligence' would have any interest in performing any of the tasks you list?

Humans are relatively super intelligent. Yet humans are not known to focus their awesome mental capacity to solving issues facing other intelligent species we share this planet with.

Narz

keeping it real

Well, natural selection programmed us to be selfish and petty and prone to distraction, fear, flattery, greed, etc., but maybe we can do better.

Without a 'self', machine 'intelligence' won't need an ego either. It won't need to defend something that doesn't exist and can serve the greater good without envy or self-servingness.

Obviously 'the greater good' will be defined by us but ideally an awareness (for lack of a better term) superior to ours can make superior decisions.

AI won't be conscious but why would we want it to be? Because we're lonely, want to talk to our robot kitten?

Consciousness creates problems; better that humans use AI tools to handle our own messy brains rather than trying to create new brains.

I think craving for computers to be more than just machine tools is the religious instinct in man, god is dead, but we're building a new daddy, love me daddy, see me, feel feelings w me.

Estebonrober

Deity

- Joined

- Jan 9, 2017

- Messages

- 6,062

Yea, I'm not on the AI hype train (I've long considered the label a marketing device too), and the intelligence in the machines is not my concern. My concern is the morons behind the "intelligence" in AI... I can easily see them setting these stupid systems on opposed doomsday machines and erasing most, if not all, of us on accident.

The warning there is the giveaway not to take the article seriously. It's a plug to give more money to these AI scammers. They can't even design an "AI" that can safely drive a car through a city, remember.

You have to encase it in lead, pretty much.

This. The human brain does not solve problems by brute-force calculation as machine-learning systems do.

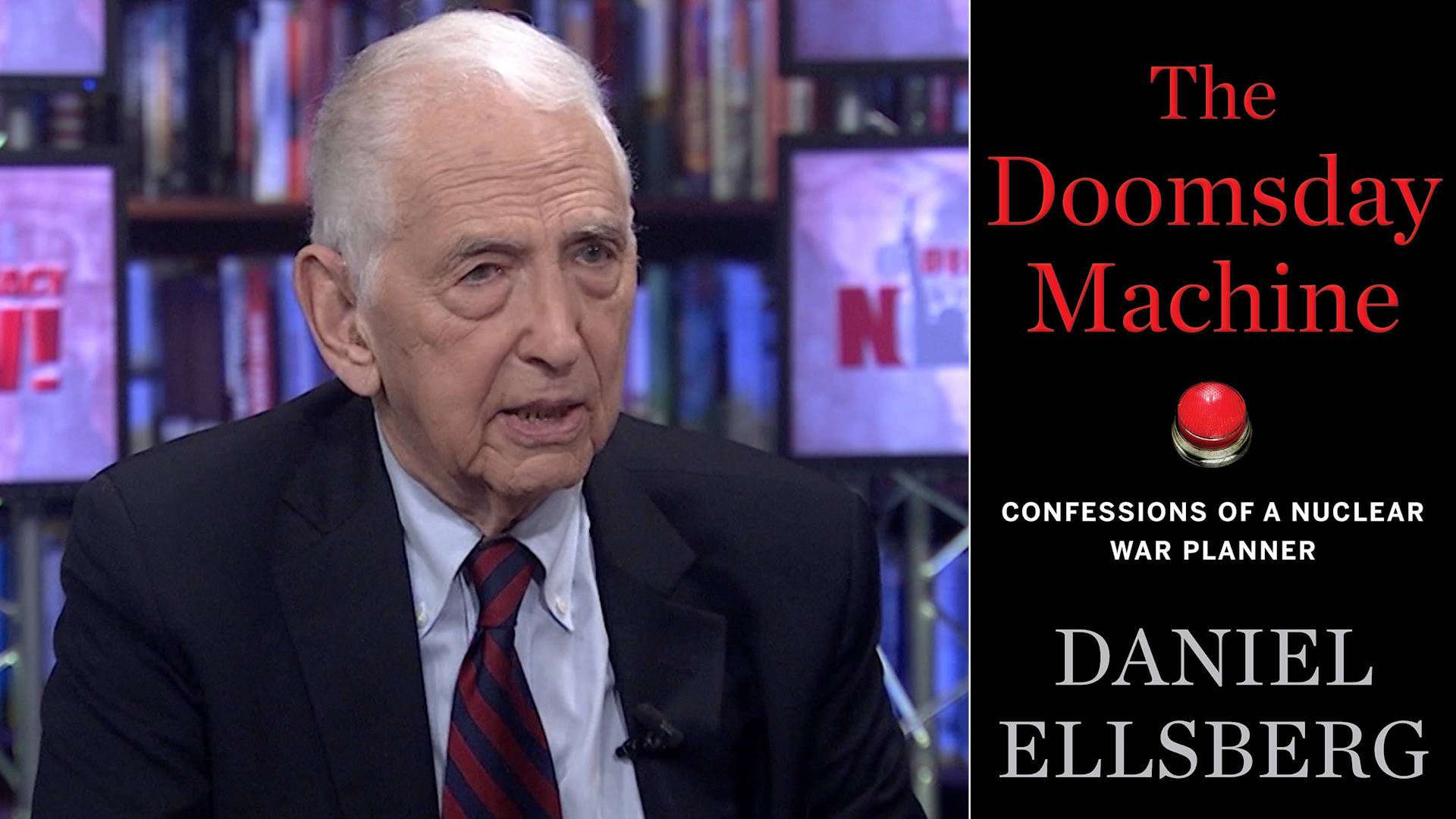

“The Doomsday Machine”: Confessions of Daniel Ellsberg, Former Nuclear War Planner

As we remember Pentagon Papers whistleblower Daniel Ellsberg, who died in June, we look at how he was also a lifelong anti-nuclear activist, stemming from his time working as a nuclear planner for the U.S. government. In December 2017, he joined us to discuss his memoir, The Doomsday Machine...

www.democracynow.org

Here is a fun video on neural networks and what is actually powering the mini revolution in computing in recognition and response software... presented by a math professor who does a great job of taking complex mathematics and making it digestible for newcomers.

3Blue1Brown

Mathematics with a distinct visual perspective. Linear algebra, calculus, neural networks, topology, and more.

Comrade Ceasefire

Simmer slowly

Maybe, maybe not. Maybe they become more evident and then fade, and then recur.

Maybe the genes OXTR, CD38, COMT, DRD4, DRD5, IGF2, and GABRB2 influence altruistic behavior, maybe not. If they do, then they too are subject to evolutionary pressures and hence "natural selection".

Core Imposter

Felon

- Joined

- May 13, 2011

- Messages

- 5,443

Most interesting thread I think I've ever found here. Still on page 5.

Comrade Ceasefire

Simmer slowly

This article bobbed up yesterday and might be of interest in relation to what you were saying. "Messy brains" is a bit of an understatement.

Overexposure Distorted the Science of Mirror Neurons | Quanta Magazine

After a decade out of the spotlight, the brain cells once alleged to explain empathy, autism and theory of mind are being refined and redefined.

The study of mirror neurons is recovering a decade after discourse about the cells’ abilities was warped by scientific and media hype.
