Driving a racing car requires a tremendous amount of skill (even in a computer game). Now, artificial intelligence has challenged the idea that this skill is exclusive to humans — and it might even change the way automated vehicles are designed.

As complex as the handling limits of a car can be, they are well described by physics, so it stands to reason that they could be calculated or learnt. Indeed, the automated Audi TTS, Shelley, was capable of generating lap times comparable to those of a champion amateur driver by using a simple model of physics. By contrast, GT Sophy (its computer-game counterpart) doesn’t make explicit calculations based on physics. Instead, it learns to drive by training a neural-network model. However, given the track and vehicle-motion information available to both Shelley and GT Sophy, it isn’t too surprising that GT Sophy can put in a fast lap with enough training data.
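To make concrete what it means for handling limits to be calculated from physics, here is a minimal sketch of one common textbook approach: a point-mass model with a "friction circle", in which the tyre grip μg is shared between cornering and accelerating or braking. A forward pass limits each point by how hard the car can accelerate, and a backward pass limits it by how hard the car must brake for what comes next. This is an assumption for illustration, not the model used by Shelley or GT Sophy; the function names, the track and all parameter values (μ, acceleration caps, top speed) are hypothetical.

```python
import math

# Illustrative sketch only: a hypothetical point-mass "friction circle" model.
# It is NOT the model used by Shelley or GT Sophy; all names and numbers are
# assumptions chosen for clarity.

G = 9.81  # gravitational acceleration, m/s^2


def corner_speed_limit(curvature, mu, v_max):
    """Highest steady speed at which tyre grip (mu * G) can still supply the
    centripetal acceleration v^2 * |curvature|; straights are capped at v_max."""
    if curvature == 0.0:
        return v_max
    return min(v_max, math.sqrt(mu * G / abs(curvature)))


def longitudinal_budget(v, curvature, mu, cap):
    """Friction circle: grip not used for cornering is left over for
    accelerating or braking, further limited by an engine or brake cap."""
    a_lat = v * v * abs(curvature)
    return min(cap, math.sqrt(max(0.0, (mu * G) ** 2 - a_lat ** 2)))


def speed_profile(curvatures, ds, mu=1.0, v_max=80.0,
                  a_drive=5.0, a_brake=10.0, v_start=0.0):
    """Highest feasible speed at each point of a path sampled every ds metres."""
    n = len(curvatures)
    v = [corner_speed_limit(k, mu, v_max) for k in curvatures]
    v[0] = min(v[0], v_start)
    # Forward pass: limit each point by how hard the car can accelerate into it.
    for i in range(n - 1):
        a = longitudinal_budget(v[i], curvatures[i], mu, a_drive)
        v[i + 1] = min(v[i + 1], math.sqrt(v[i] ** 2 + 2.0 * a * ds))
    # Backward pass: limit each point by how hard the car can brake for the next
    # one (using the grip left over at that next point, a simplification).
    for i in range(n - 2, -1, -1):
        a = longitudinal_budget(v[i + 1], curvatures[i + 1], mu, a_brake)
        v[i] = min(v[i], math.sqrt(v[i + 1] ** 2 + 2.0 * a * ds))
    return v


def elapsed_time(v, ds):
    """Time to cover the path, assuming constant acceleration within each step."""
    return sum(2.0 * ds / (v[i] + v[i + 1]) for i in range(len(v) - 1))


if __name__ == "__main__":
    # Hypothetical track: 200 m straight, 100 m of 50 m-radius corner, 200 m straight.
    ds = 1.0
    track = [0.0] * 200 + [1.0 / 50.0] * 100 + [0.0] * 200
    for mu in (1.0, 1.2):
        v = speed_profile(track, ds, mu=mu, v_start=30.0)
        print(f"mu={mu}: slowest {min(v):.1f} m/s, time {elapsed_time(v, ds):.2f} s")
```

In this toy model, raising μ from 1.0 to 1.2 lifts the cornering limit from about 22.1 to about 24.3 m/s and shortens the time. The limits come straight out of a formula here; a learning system such as GT Sophy has to discover them from experience instead.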

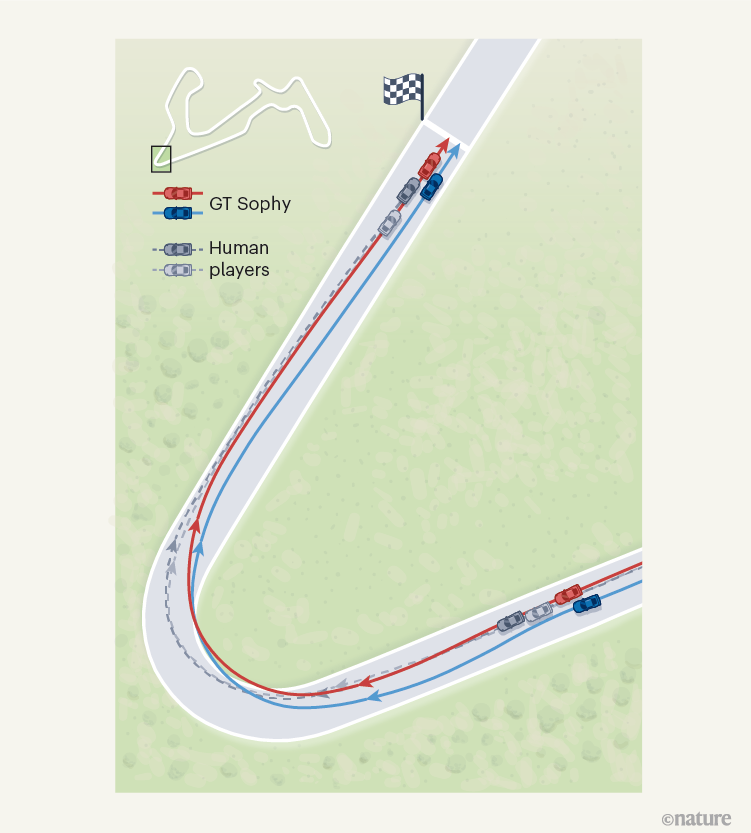

What really stands out is GT Sophy’s performance against human drivers in a head-to-head competition. Far from using a lap-time advantage to outlast opponents, GT Sophy simply outraces them. Through the training process, GT Sophy learnt to take different lines through the corners in response to different conditions. In one case, two human drivers attempted to block the preferred path of two GT Sophy cars, yet the AI succeeded in finding two different trajectories that overcame this block and allowed the AI’s cars to pass (Fig. 1).

GT Sophy also proved capable of executing a classic manoeuvre on a simulation of a famous straight of the Circuit de la Sarthe, the track that hosts the 24 Hours of Le Mans race. The move involves quickly driving out of the wake of the vehicle ahead to increase the drag on the lead car in a bid to overtake it. GT Sophy learnt this trick through training, on the basis of many examples of this exact scenario, although the same could be said for every human racing-car driver capable of this feat. Outracing human drivers so skilfully in a head-to-head competition represents a landmark achievement for AI.

The implications of Wurman and colleagues’ work go well beyond video-game supremacy. As companies work to perfect fully automated vehicles that can deliver goods or passengers, there is an ongoing debate about how much of the software should use neural networks and how much should be based on physics alone. In general, neural networks are the undisputed champions when it comes to perceiving and identifying objects in the surrounding environment. However, trajectory planning has remained the province of physics and optimization. Even the vehicle manufacturer Tesla, which uses neural networks as the core of its autonomous-driving system, has revealed that its neural networks feed into an optimization-based trajectory planner (see go.nature.com/3kgkpua). But GT Sophy’s success on the track suggests that neural networks might one day have a larger role in the software of automated vehicles than they do today.

Figure 1. Wurman and colleagues report a neural-network algorithm, called GT Sophy, that is capable of winning against the best human players of a racing video game. When two human drivers attempted to block the preferred path of two GT Sophy cars, the algorithm found two ways to overtake them.