
The AI Thread

- Thread starter Truthy


Ferocitus

Deity

I finally got my computer model of a neuron working, with high-speed communication along the inside of actin microtubules.

This really looks beautiful, Ferocitus.

Maybe one day I'll be able to create something just as pretty and useless by linking large numbers of neurons together.

Maybe even my own!

The most highly paid/white-collar/high-tech job to be taken by AI:

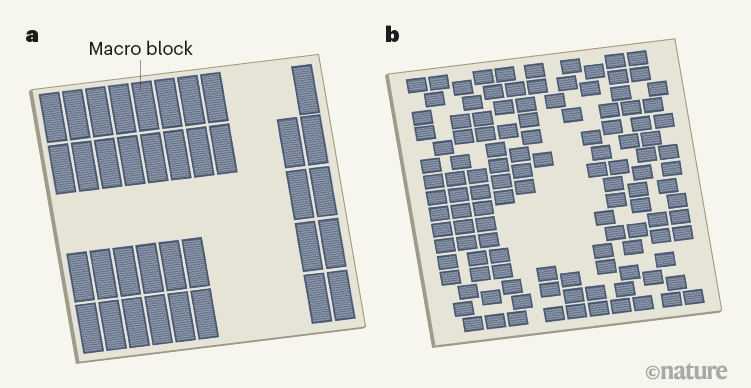

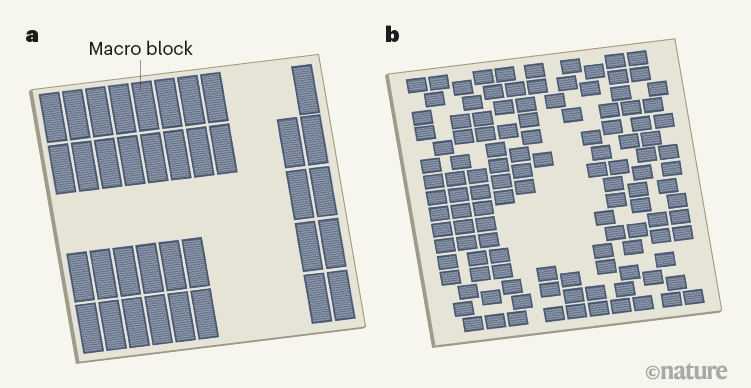

Microchip floorplans designed by humans differ from those produced by a machine-learning system. An early step in microchip design is floorplanning — the placement of memory components called macro blocks on an empty layout canvas. Floorplanning is immensely complicated because of the vast number of potential configurations of macro blocks, and it involves multiple iterations as the logic-circuit design evolves. Each iteration is produced manually by human engineers, over days or weeks. a, This floorplan for a chip (the Ariane RISC-V processor) is considered by human designers to be a good one. Its 37 macro blocks are close-packed in well-aligned rows and columns, leaving an uncluttered area for placement of other components. b, Mirhoseini et al.¹ report a machine-learning agent that, in just a few hours, designs floorplans that outperform those designed by humans. This agent-produced arrangement is another implementation of the Ariane processor, and is very different from that shown in a.

Write-up Paper (paywalled) Pre-print

AI system outperforms humans in designing floorplans for microchips

A machine-learning system has been trained to place memory blocks in microchip designs. The system beats human experts at the task, and offers the promise of better, more-rapidly produced chip designs than are currently possible.

Success or failure in designing microchips depends heavily on steps known as floorplanning and placement. These steps determine where memory and logic elements are located on a chip. The locations, in turn, strongly affect whether the completed chip design can satisfy operational requirements such as processing speed and power efficiency. So far, the floorplanning task, in particular, has defied all attempts at automation. It is therefore performed iteratively and painstakingly, over weeks or months, by expert human engineers.

On 22 April 2020, Mirhoseini et al. posted a preprint of the current paper to the online arXiv repository. It stated that “in under 6 hours, our method can generate placements that are superhuman or comparable” — that is, the method can outperform humans in a startlingly short period of time. Within days, numerous semiconductor-design companies, design-tool vendors and academic-research groups had launched efforts to understand and replicate the results.
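To make the floorplanning problem concrete: one widely used, simple proxy for placement quality is half-perimeter wirelength (HPWL), the sum over all nets of half the perimeter of the box enclosing the blocks that net connects. The sketch below scores a placement by HPWL and improves it by greedy pairwise swaps of block locations. It is a classic local-search baseline offered as an illustration under stated assumptions, not the machine-learning method of Mirhoseini et al.; the block names, grid and nets are hypothetical, and real floorplanners also weigh congestion, density and timing.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def hpwl(positions: Dict[str, Point], nets: List[List[str]]) -> float:
    """Half-perimeter wirelength: for each net, the half-perimeter of the
    bounding box around the blocks it connects, summed over all nets."""
    total = 0.0
    for net in nets:
        xs = [positions[block][0] for block in net]
        ys = [positions[block][1] for block in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def improve_by_swaps(
    positions: Dict[str, Point], nets: List[List[str]]
) -> Tuple[Dict[str, Point], float]:
    """Greedy local search: swap the locations of two blocks whenever that
    lowers HPWL, until no single swap helps (a local optimum)."""
    pos = dict(positions)
    names = sorted(pos)
    best = hpwl(pos, nets)
    improved = True
    while improved:
        improved = False
        for i in range(len(names)):
            for j in range(i + 1, len(names)):
                a, b = names[i], names[j]
                pos[a], pos[b] = pos[b], pos[a]
                cost = hpwl(pos, nets)
                if cost < best:
                    best, improved = cost, True
                else:
                    pos[a], pos[b] = pos[b], pos[a]  # undo a swap that did not help
    return pos, best


if __name__ == "__main__":
    # Hypothetical example: four blocks on a 2x2 grid of slots, wired in a chain.
    start = {"A": (0, 0), "B": (1, 1), "C": (1, 0), "D": (0, 1)}
    chain = [["A", "B"], ["B", "C"], ["C", "D"]]
    print(hpwl(start, chain))                  # 5.0
    print(improve_by_swaps(start, chain)[1])   # 3.0
```

Greedy swapping only finds a local optimum; on realistic designs with thousands of blocks and competing objectives, the search space is what makes the problem hard for both hand-written heuristics and humans.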


Hm, wouldn't something that depends on the area used being optimal for components of another type (clearly affected by the first type), which are only placed later, be algorithm-based?

And if not, because of some many-way complication, would an AI be better here not because of actual calculation but because it can react in real time to the effect of a rearrangement? (E.g., a human can tell you in real time whether they like more or less of ingredient x in a concoction; likewise, a computer can register a speed increase in real time.)

(Very general questions, since I don't know how this works.)


GPT-2 (a neural network trained on natural-language tasks) demonstrates the ability to "understand" other modalities it wasn't trained for.

It shows good results on protein folding, numerical computation and image processing tasks.


https://arxiv.org/abs/2103.05247

Pretrained Transformers as Universal Computation Engines

Kevin Lu, Aditya Grover, Pieter Abbeel, Igor Mordatch

We investigate the capability of a transformer pretrained on natural language to generalize to other modalities with minimal finetuning -- in particular, without finetuning of the self-attention and feedforward layers of the residual blocks. We consider such a model, which we call a Frozen Pretrained Transformer (FPT), and study finetuning it on a variety of sequence classification tasks spanning numerical computation, vision, and protein fold prediction. In contrast to prior works which investigate finetuning on the same modality as the pretraining dataset, we show that pretraining on natural language can improve performance and compute efficiency on non-language downstream tasks.

warpus

Sommerswerd asked me to change this

So Uhh.. AI?

What are my thoughts on AI you might ask? In my opinion as soon as we actually are able to create true & proper artificial intelligence, it will cease being artificial and will simply be intelligence.

I mean, if you really think about it, what are we but machines that use a neural net to obtain knowledge and learn how to behave and operate in this world?


warpus

Sommerswerd asked me to change this

Oh my god, I need more freaking coffee

brb posting in right thread


Ferocitus

Deity

AI Winter is Coming!

Hundreds of AI Tools Were Built to Catch Covid. None of Them Helped

At the start of the pandemic, remembers MIT Technology Review's senior editor for AI, the community "rushed to develop software that many believed would allow hospitals to diagnose or triage patients faster, bringing much-needed support to the front lines — in theory."

... a review in the British Medical Journal that is still being updated as new tools are released and existing ones tested. She and her colleagues have looked at 232 algorithms for diagnosing patients or predicting how sick those with the disease might get. They found that none of them were fit for clinical use.

...a machine-learning researcher at the University of Cambridge, and his colleagues, and published in Nature Machine Intelligence. This team zoomed in on deep-learning models for diagnosing covid and predicting patient risk from medical images, such as chest x-rays and chest computer tomography (CT) scans. They looked at 415 published tools and, like Wynants and her colleagues, concluded that none were fit for clinical use.

https://www.technologyreview.com/20...-ai-failed-covid-hospital-diagnosis-pandemic/


It is pretty bad, but it seems their complaints are more about the selection bias in the training sets and poor reporting than that they are all rubbish.


This review indicates that almost all pubished [sic] prediction models are poorly reported, and at high risk of bias such that their reported predictive performance is probably optimistic. However, we have identified two (one diagnostic and one prognostic, the Jehi diagnostic model and the 4C mortality score) promising models that should soon be validated in multiple cohorts, preferably through collaborative efforts and data sharing to also allow an investigation of the stability and heterogeneity in their performance across populations and settings.

Ferocitus

Deity


Bad reporting and/or over-promising: the same complaints made by James Lighthill in his damning report that caused nearly all AI research at universities in the UK and the USA to have their funding and grants stopped.

I've been involved in a related field, Artificial Life Optimisation, for over 30 years, and I have read literally hundreds of papers where the authors describe a method, test it on an obscure set of functions, and then claim that their method is superior to those of their competitors. Eventually, journals refused to accept that type of paper.

Since Covid broke out, dozens of papers have appeared on arXiv, bioRxiv and other repositories, with the same old unverified claims of superiority. It's just opportunistic hokum.

AI can invent things, legally and down under

An Australian Court has decided that an artificial intelligence can be recognised as an inventor in a patent submission.

Justice Beach said:

"… in my view an artificial intelligence system can be an inventor for the purposes of the Act.

"First, an inventor is an agent noun; an agent can be a person or thing that invents. Second, so to hold reflects the reality in terms of many otherwise patentable inventions where it cannot sensibly be said that a human is the inventor. Third, nothing in the Act dictates the contrary conclusion."

The Justice also worried that the Commissioner of Patents' logic in rejecting Thaler's patent submissions was faulty.

"On the Commissioner's logic, if you had a patentable invention but no human inventor, you could not apply for a patent," the judgement states. "Nothing in the Act justifies such a result."

Thaler has filed patent applications around the world in the name of DABUS – a Device for the Autonomous Boot-strapping of Unified Sentience. Among the items DABUS has invented are a food container and a light-emitting beacon.

The UK, USA and European Union have not chosen to adopt similar logic – and indeed the USA specifies that inventors must be human. However DABUS was granted a patent in South Africa last week, for its food container.

Some think it a bad idea:

"Just because patents are (or, at least, can be) good, it does not follow that more patents, generated in more ways, by more entities, must be better," he wrote on his Patentology blog.

"I do not consider the decision … to serve Australia’s interests," Summerfield added. "I think that it represents a form of judicial activism that results in the development of policy – in this case, the important matter of who, or what, can form the basis for the grant of a patent monopoly enforceable against the public at large – from the bench."

Speaking to Guardian Australia, Summerfield raised the prospect of a flood of patents being awarded to machine-generated inventions, creating so many patents that other innovation becomes impossible.

I do not really get the argument against. I am not very keen on artificial monopolies created by intellectual property, but if they are going to exist, then restricting certain entities from this protection seems wrong. If the rule were "you can only get a patent if you did the work", that would make sense, but it would also exclude big companies that use employees to generate patents, even though that already seems to create "a flood of patents" that makes it very hard to innovate any other way. If they are allowed to do so, why should I not use a computer to come up with the innovation?

Ferocitus

Deity

There are now hundreds of algorithms supposedly inspired by some method of exploration and exploitation used by animals. Among the first of their type were Genetic Algorithms, Evolution Strategies, and Ant Colony Optimisation.
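Evolution Strategies, one of the early methods named above, are easy to sketch. Below is a minimal (1+1)-ES with Rechenberg's one-fifth success rule, minimising the sphere function, a standard benchmark. The function names, step-size factor (1.22), iteration count and seed are assumptions chosen for illustration, not taken from any paper mentioned in this thread.

```python
import random
from typing import Callable, List, Tuple


def sphere(x: List[float]) -> float:
    """Classic benchmark: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def one_plus_one_es(
    f: Callable[[List[float]], float],
    x0: List[float],
    sigma: float = 1.0,
    iterations: int = 2000,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """(1+1)-ES: mutate one parent with Gaussian noise, keep the child if it
    is no worse, and adapt the step size with the one-fifth success rule."""
    rng = random.Random(seed)
    parent = list(x0)
    f_parent = f(parent)
    grow = 1.22               # step-size factor after a success (assumed value)
    shrink = grow ** -0.25    # net effect is zero when 1 in 5 mutations succeeds
    for _ in range(iterations):
        child = [v + sigma * rng.gauss(0.0, 1.0) for v in parent]
        f_child = f(child)
        if f_child <= f_parent:
            parent, f_parent = child, f_child
            sigma *= grow
        else:
            sigma *= shrink
    return parent, f_parent
```

Running `one_plus_one_es(sphere, [3.0] * 5)` drives the objective towards zero; because a child replaces its parent only when it is no worse, the reported value can never exceed the starting one. A smooth toy function like this is exactly the kind of easy test case on which a "novel" method can look good without proving much.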

In the last 20 years the number of strategies has increased to the point that journals are no longer accepting papers unless they can be shown, mathematically, to be unique, and not just slight variations of other established methods.

The list of natural and man-made processes that have inspired such metaheuristic frameworks is huge. Ants, bees, termites, bacteria, invasive weed, bats, flies, fireflies, fireworks, mine blasts, frogs, wolves, cats, cuckoos, consultants, fish, glow-worms, krill, monkeys, anarchic societies, imperialist societies, league championships, clouds, dolphins, Egyptian vultures, green herons, flower pollination, roach infestations, water waves, optics, black holes, the Lorentz transformation, lightning, electromagnetism, gravity, music making, “intelligent” water drops, river formation, and many, many more, have been used as the basis of a “novel” metaheuristic technique.

A History of Metaheuristics, Kenneth Sorensen, Marc Sevaux, and Fred Glover, 2017.

Sorensen, in another paper, made the wry observation that the Gravity Optimisation Technique officially likes the Intelligent Waterdrop Algorithm on Facebook. That sums up the state of a lot of metaheuristics and AI for me.

Many of the methods stress the beauty of the animal kingdom's solutions to problems. Why is that deemed to be important, and not some other anthropomorphized aspect?

I've often felt like creating a junk algorithm, maybe something based on an unfortunate woman who is forced into sex work to support her disabled twins, and who uses a strategy to optimise profits from her "corners".

Why not have algorithms based on "pathos" instead? For me, the field has descended into bathos, so maybe I should try that as a basis for a method.

Pathos and Bathos - cue Kyriakos to explain their subtleties and why I'm using them incorrectly.


TheMeInTeam

If A implies B...

- Joined: Jan 26, 2008

- Messages: 27,995

How the hell do we stop deepfakes from ending audio/video evidence as a concept? Soon anything can be convincingly faked, if they can do voices now too. If the fakes are good enough, I imagine a scenario where a Trump-like person literally kills somebody on camera in broad daylight, claims it is a deepfake video, and gets away with it.

Or more likely the other way around, making it appear people did things they did not.

A corpse will be real, we cannot fake them yet.

"Find me a man, and I'll find you his crime". A corpse is as real as the right people say it is. Though more practically, what will be "found" probably won't be a corpse, but rather "evidence" or "admission" of some other wrongdoing. Or perhaps the corpse is the man himself, after he "convincingly threatened" whoever made him a corpse.

They probably mean intelligence not created by humans; however, artificial intelligence nowadays is often only partly created by humans. It seems just a question of time before it has surpassed humans in everything; the abilities it has today are impressive.

In some fields it already has. It's a more immediate problem than runaway general AI, most likely. The threat from runaway general AI is non-zero, but we are already seeing automation/machine algorithms outperform people at a wide variety of tasks, and that number grows every year. What does the workforce look like when all driving and most office work is done by machines? We won't be post-scarcity yet, but the % of unemployable people will be drastically higher than at any point in history. I don't know exactly what that will look like when it manifests in society, but it probably won't be good. I wish more of us, as a species, would see this coming sooner and plan how to handle it in a way that doesn't result in starvation, civil wars, or other desperate acts, or at least reduces them.

Is the gist of the idea used for current AI to have the AI find some way to program for itself that which would make it react to some lesson in the manner (supposedly) indented (also intended, but I prefer indented)? That is what I get from the articles, however I want to ask why access by a human to that computer-programming-for-itself code is not the norm.
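The question above describes the basic idea behind most current machine learning: instead of a human writing the rules, the program adjusts its own parameters until its outputs match the examples it is shown. Here is a minimal sketch of that idea, assuming the classic perceptron learning rule (far simpler than modern networks trained by gradient descent), learning logical AND. The function names are hypothetical. The learned "code" is fully accessible to a human, but it is only a handful of numbers; modern networks have millions to billions of them, which is part of why reading them tells you little about what the system will do.

```python
from typing import List, Tuple

Example = Tuple[Tuple[int, int], int]


def predict(w: List[float], b: float, x: Tuple[int, int]) -> int:
    """Fire (1) if the weighted sum of inputs plus bias is positive."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(
    examples: List[Example], epochs: int = 20, lr: float = 1.0
) -> Tuple[List[float], float]:
    """Nudge the weights towards each misclassified example; nobody writes
    the rule itself, it ends up encoded in w and b."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, target in examples:
            error = target - predict(w, b, x)  # 0 when already correct
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b


AND_EXAMPLES: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train_perceptron(AND_EXAMPLES)
    print(weights, bias)  # the entire "self-written program"
```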

Human involvement is pretty common at some points. But human involvement doesn't guarantee AI alignment to human goals. It only helps a little. Main issue being an actual general AI would deceive humans, and would likely be good at it.

One thing I don't see emphasized a lot in setting up AI goals is that, to my knowledge, we haven't actually mapped aggregate human utility functions, or even a single human's utility function in total. Yet if we're to make a general AI that does what we want it to do, don't we HAVE to do that? Even in broad terms, it seems pretty hard to make something do what you want when you don't actually know what you want. If it's just "make an AI that's good at StarCraft" or "attain the most casualties at a given price constraint at war", then yeah we can define those goals for a specialized AI.

But for a general AI? What IS "intended", consistently? If we're making one of those, coherency of utilities/standards is no longer something I'm asking for to demonstrate flaws in an argument. In the case of a general AI, you either do that...or you die.

^I am not sure if it is even possible to do that... If it was to be done in a similar manner to commercial game AI, it would require mapping a number of things so as to create a coded metaphor of them in the computer language - which would be easy, but very primitive and limited, which would allow for loopholes. I don't know if you could even force a kind of "prime directive" in a non-primitive way

A problem is that no human forms ethical considerations in the exact same way (I'd guess that no level of intelligence consists of the exact same mental connections in a human, so the analogue in a machine would have to be more limited, or dangerously loose).


TheMeInTeam

If A implies B...

- Joined: Jan 26, 2008

- Messages: 27,995

I'm not sure it's possible either, but it doesn't seem like the sort of thing we should let general AI figure out on its own.

Saw the following today (from Quanta Magazine).

She mentions GEB, and analogy-making. Also claims that current AI research has reached or is reaching a limit - which is why she suggests fusing it with a different approach.

Not that analogy-making is itself possible for AI without a) having a powerful enough low level (à la neurons) and b) coming up with a rather ingenious way to mimic the elasticity (while maintaining the poignant nature) of analogy-making in actual humans.

An analogy is its own world, and can then become the primary world and have secondary analogy-worlds attached to it whether those meta-analogies are examined in relation to the old primary world or not. While this may be doable on the surface, for AI, I wouldn't suspect that it is also doable to attach levels of significance with something analogous to the human sense of self; so the meta worlds will just be another main world.


Apple to use AI to look for kiddie porn on your phone, but what else will they look for?

Apple is about to announce a new technology for scanning individual users' iPhones for banned content. While it will be billed as a tool for detecting child abuse imagery, its potential for misuse is vast based on details entering the public domain.

Rather than using age-old hash-matching technology, Apple's new tool – due to be announced today along with a technical whitepaper, we are told – will use machine learning techniques to identify images of abused children.

Governments in the West and authoritarian regions alike will be delighted by this initiative. What's to stop China (or some other censorious regime such as Russia or the UK) from feeding images of wanted fugitives into this technology and using that to physically locate them?
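The article contrasts Apple's tool with "age-old hash-matching technology". For readers unfamiliar with the latter, here is a minimal sketch of one simple perceptual hash (an "average hash") plus a Hamming-distance lookup against a list of known hashes. It is purely illustrative: the function names and the 2-bit threshold are hypothetical, real systems first downscale images and use more robust hashes, and nothing here describes what Apple actually built.

```python
from typing import List, Sequence

Image = Sequence[Sequence[int]]


def average_hash(pixels: Image) -> List[int]:
    """One bit per pixel: 1 if brighter than the image's mean, else 0.
    Assumes the image is already shrunk to a small grayscale grid."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions at which two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def matches_known(
    pixels: Image, known_hashes: List[List[int]], max_distance: int = 2
) -> bool:
    """True if the image's hash is within max_distance bits of any known hash."""
    h = average_hash(pixels)
    return any(hamming(h, k) <= max_distance for k in known_hashes)
```

Because the hash only records which pixels are above the average, a uniformly brightened copy of an image produces the same hash and still matches, while a very different image (for example, its inverse) does not. Unlike a machine-learning classifier, a matcher like this can only flag images close to ones already on its list, which is why swapping in a different list of target images is the concern raised above.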

