Nice piece in the NYT today suggesting that AI has been overhyped, with this especially nice line in it:

I suspect they may not have tried to use it for anything it is good for. Here is a recent article about people who do find value in it, even if the work still needs human input.

Why mathematics is set to be revolutionized by AI

Giving birth to a conjecture — a proposition that is suspected to be true, but needs definitive proof — can feel to a mathematician like a moment of divine inspiration. Mathematical conjectures are not merely educated guesses. Formulating them requires a combination of genius, intuition and experience. Even a mathematician can struggle to explain their own discovery process. Yet, counter-intuitively, I think that this is the realm in which machine intelligence will initially be most transformative.

In 2017, researchers at the London Institute for Mathematical Sciences, of which I am director, began applying machine learning to mathematical data as a hobby. During the COVID-19 pandemic, they discovered that simple artificial intelligence (AI) classifiers can predict an elliptic curve's rank, a measure of its complexity. Elliptic curves are fundamental to number theory, and understanding their underlying statistics is a crucial step towards solving one of the seven Millennium Problems, the Birch and Swinnerton-Dyer conjecture. The Millennium Problems were selected by the Clay Mathematics Institute in Providence, Rhode Island, and each carries a prize of US$1 million. Few expected AI to make a dent in this high-stakes arena.
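To give a feel for what such a classifier might be fed: one input used in work of this kind is the list of Frobenius traces a_p of a curve, obtained by counting its points modulo small primes. The sketch below assumes a curve in short Weierstrass form y² = x³ + ax + b and computes `a_p = p + 1 - #E(F_p)` using Euler's criterion. The function names are hypothetical, and the classifier itself (which would be trained on curves of known rank) is not shown.

```python
def is_prime(n: int) -> bool:
    """Trial-division primality test; adequate for the small primes used here."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def frobenius_trace(a: int, b: int, p: int) -> int:
    """Return a_p = p + 1 - #E(F_p) for E: y^2 = x^3 + a*x + b over F_p.

    Assumes p is an odd prime of good reduction (p does not divide 4a^3 + 27b^2).
    """
    if p == 2 or not is_prime(p):
        raise ValueError("p must be an odd prime")
    if (4 * a**3 + 27 * b**2) % p == 0:
        raise ValueError("curve has bad reduction at p")
    total = 0
    for x in range(p):
        rhs = (x**3 + a * x + b) % p
        # Euler's criterion: rhs^((p-1)/2) mod p is 1 for a nonzero square,
        # p - 1 for a non-square and 0 for rhs == 0 (the Legendre symbol).
        ls = pow(rhs, (p - 1) // 2, p)
        total += -1 if ls == p - 1 else ls
    # Each x contributes 1 + legendre(rhs) points; add 1 for the point at infinity.
    # So #E(F_p) = p + 1 + total, and a_p = -total.
    return -total


def feature_vector(a: int, b: int, max_prime: int = 100) -> list[int]:
    """a_p for every odd prime of good reduction up to max_prime (hypothetical classifier input)."""
    return [
        frobenius_trace(a, b, p)
        for p in range(3, max_prime + 1)
        if is_prime(p) and (4 * a**3 + 27 * b**2) % p != 0
    ]


print(feature_vector(-1, 0, 30))
```

A handy sanity check: for the curve y² = x³ - x, a_p is 0 for every prime p ≡ 3 (mod 4), and for any curve the Hasse bound |a_p| ≤ 2√p must hold.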

AI has made inroads in other areas, too. A few years ago, a computer program called the Ramanujan Machine produced new formulae for fundamental constants, such as π and e. It did so by exhaustively searching through families of continued fractions: expressions in which a number is added to a fraction whose denominator is itself a number plus a fraction, and so on indefinitely. The formulae it found were conjectures; some have since been proved, whereas others remain open problems.
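A continued fraction whose numerators a(n) and denominators b(n) are polynomials in n can be evaluated by truncating it at some depth and working outwards from the innermost level. The sketch below does that, then runs a toy brute-force search over a tiny family of polynomials. It is in the spirit of, but far simpler than, what the Ramanujan Machine does; `eval_cf`, `search` and the polynomial family a(n) = k·n², b(n) = p·n + q are hypothetical choices for illustration. The classical identity 4/π = 1 + 1²/(3 + 2²/(5 + 3²/(7 + …))) gives the search something to find.

```python
import math
from typing import Callable, List, Tuple


def eval_cf(a: Callable[[int], float], b: Callable[[int], float], depth: int = 200) -> float:
    """Evaluate b(0) + a(1)/(b(1) + a(2)/(b(2) + ... + a(depth)/b(depth))).

    Works from the innermost level outwards, which is stable when all terms are positive.
    """
    value = b(depth)
    for n in range(depth, 0, -1):
        value = b(n - 1) + a(n) / value
    return value


def search(target: float, max_coef: int = 3, tol: float = 1e-9) -> List[Tuple[int, int, int]]:
    """Try every a(n) = k*n^2, b(n) = p*n + q with 1 <= k, p, q <= max_coef and
    return the (k, p, q) whose continued fraction matches target to within tol."""
    hits = []
    for k in range(1, max_coef + 1):
        for p in range(1, max_coef + 1):
            for q in range(1, max_coef + 1):
                value = eval_cf(lambda n: k * n * n, lambda n: p * n + q)
                if abs(value - target) < tol:
                    hits.append((k, p, q))
    return hits


# 4/pi = 1 + 1^2/(3 + 2^2/(5 + 3^2/(7 + ...))), i.e. a(n) = n^2, b(n) = 2n + 1.
print(eval_cf(lambda n: n * n, lambda n: 2 * n + 1), 4 / math.pi)
print(search(4 / math.pi))
```

The search result should include (1, 2, 1), the triple encoding the identity above. A real search has to use much larger families and higher precision, since a float match to 9 digits is only a hint, never a proof.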

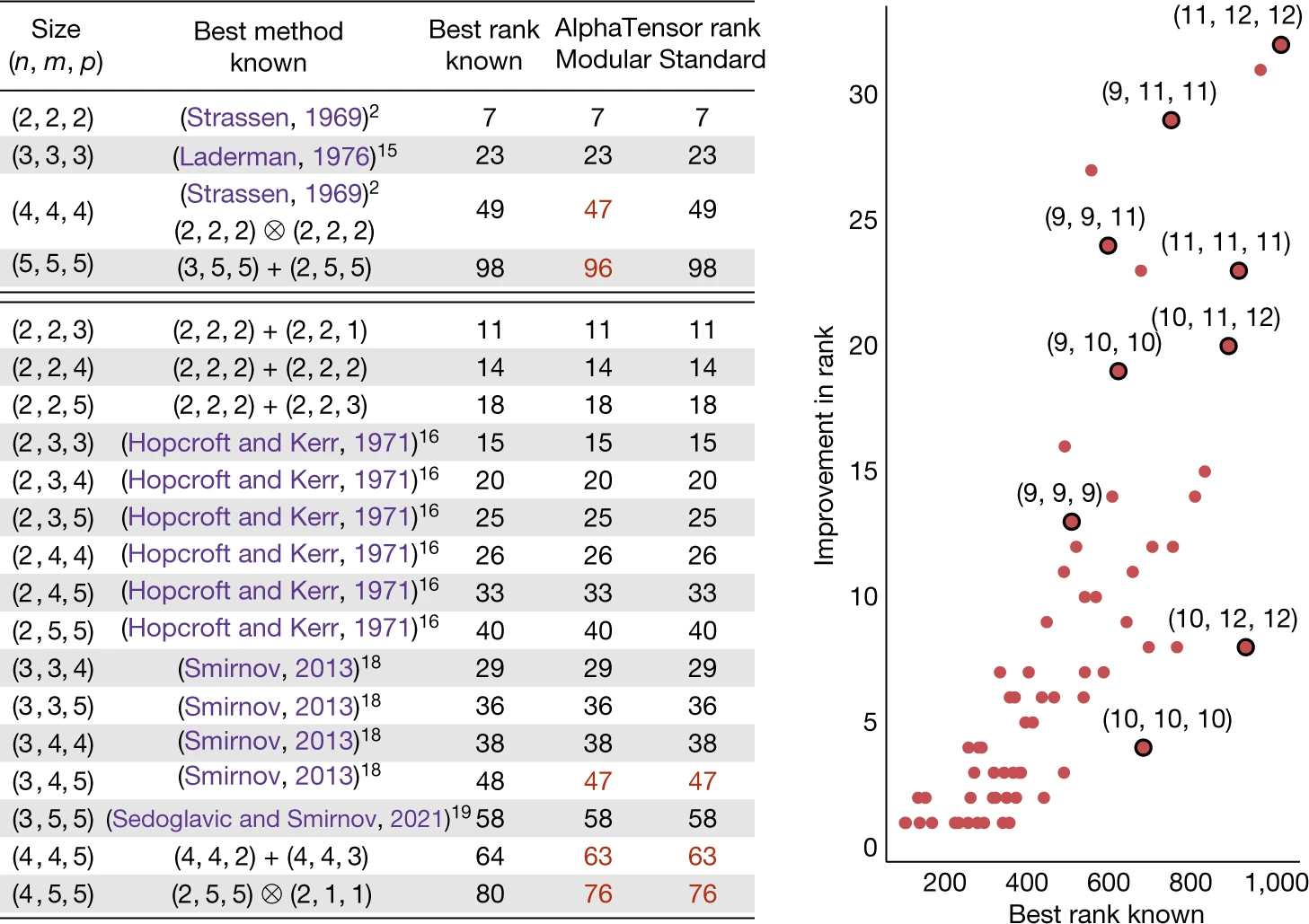

Another example pertains to knot theory, a branch of topology that studies how a piece of string can be tangled up before its ends are glued together into a closed loop. Researchers at Google DeepMind, based in London, trained a neural network on data for many different knots and discovered an unexpected relationship between their algebraic and geometric invariants.

How has AI made a difference in areas of mathematics in which human creativity was thought to be essential?

First, there are no coincidences in maths. In real-world experiments, false negatives and false positives abound. But in maths, a single counterexample leaves a conjecture dead in the water. For example, the Pólya conjecture states that, for every integer n greater than 1, at least half of the integers from 1 to n have an odd number of prime factors (counted with multiplicity). But in 1960, it was found that the conjecture does not hold for n = 906,180,359. In one fell swoop, the conjecture was falsified.
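The Pólya conjecture is easy to check by brute force, which is partly why it looked so convincing. A standard way to phrase it uses the Liouville function λ(k) = (-1)^Ω(k), where Ω(k) counts prime factors with multiplicity: the conjecture says the running sum λ(1) + … + λ(n) never exceeds 0 for n ≥ 2. The sketch below (the function name is hypothetical) sieves Ω up to a limit and reports the first n that breaks the conjecture. Any limit that is comfortable to run in plain Python returns None, because the first failure lies in the hundreds of millions.

```python
from math import isqrt
from typing import Optional


def first_polya_counterexample(limit: int) -> Optional[int]:
    """Return the smallest n in [2, limit] for which fewer than half of 1..n have an
    odd number of prime factors (with multiplicity), or None if there is none."""
    # Smallest-prime-factor sieve: spf[m] is the least prime dividing m (for m >= 2).
    spf = list(range(limit + 1))
    for p in range(2, isqrt(limit) + 1):
        if spf[p] == p:  # p is prime
            for m in range(p * p, limit + 1, p):
                if spf[m] == m:
                    spf[m] = p
    big_omega = [0] * (limit + 1)  # Omega(1) = 0
    liouville_sum = 1  # lambda(1) = (-1)^0 = 1
    for n in range(2, limit + 1):
        big_omega[n] = big_omega[n // spf[n]] + 1
        liouville_sum += -1 if big_omega[n] % 2 else 1
        # A positive sum means even-Omega numbers outnumber odd-Omega ones in 1..n.
        if liouville_sum > 0:
            return n
    return None


print(first_polya_counterexample(10**5))  # prints None
```

The design choice is deliberate: a search like this can only ever confirm the conjecture up to its limit, while a single reported n would settle the question for good.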

Second, mathematical data, on which AI can be trained, are cheap. Primes, knots and many other types of mathematical object are abundant. The On-Line Encyclopedia of Integer Sequences (OEIS) contains almost 375,000 sequences, from the familiar Fibonacci sequence (1, 1, 2, 3, 5, 8, 13, …) to the formidable Busy Beaver sequence (0, 1, 4, 6, 13, …), which grows faster than any computable function. Scientists are already using machine-learning tools to search the OEIS for unanticipated relationships.
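Generating such data costs almost nothing, and so does testing a simple hypothesis against it. As an illustration (classical exact arithmetic rather than machine learning, and not the method of any specific OEIS tool), the sketch below looks for the lowest-order linear recurrence with constant coefficients that reproduces a list of terms; `solve_exact` and `guess_recurrence` are hypothetical helpers. Given the Fibonacci terms it recovers a(n) = a(n-1) + a(n-2); given only the five Busy Beaver values listed above it finds nothing, since five terms are too few to test any recurrence beyond order 2.

```python
from fractions import Fraction
from typing import List, Optional, Sequence


def solve_exact(matrix: List[List[int]], rhs: List[int]) -> Optional[List[Fraction]]:
    """Solve a square linear system exactly by Gauss-Jordan elimination; None if singular."""
    n = len(matrix)
    aug = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            return None
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def guess_recurrence(seq: Sequence[int], max_order: int = 4) -> Optional[List[Fraction]]:
    """Find c with seq[n] = c[0]*seq[n-1] + ... + c[d-1]*seq[n-d] for every available n,
    trying the smallest order d first. Fits on the first 2d terms, checks on the rest."""
    for d in range(1, max_order + 1):
        if len(seq) < 2 * d + 1:  # need at least one term beyond the fit to check
            break
        rows = [[seq[n - k] for k in range(1, d + 1)] for n in range(d, 2 * d)]
        coeffs = solve_exact(rows, [seq[n] for n in range(d, 2 * d)])
        if coeffs is None:
            continue
        if all(
            seq[n] == sum(c * seq[n - k] for k, c in enumerate(coeffs, start=1))
            for n in range(2 * d, len(seq))
        ):
            return coeffs
    return None


fibonacci = [1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
print(guess_recurrence(fibonacci))  # [Fraction(1, 1), Fraction(1, 1)]
print(guess_recurrence([0, 1, 4, 6, 13]))  # None
```

Exact `Fraction` arithmetic is used so that a fitted recurrence either reproduces every integer term or fails outright, mirroring the point above that one mismatch is enough to reject a pattern.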

AI can help us to spot patterns and form conjectures. But not all conjectures are created equal. They also need to advance our understanding of mathematics. In his 1940 essay A Mathematician’s Apology, G. H. Hardy explains that a good theorem “should be one which is a constituent in many mathematical constructs, which is used in the proof of theorems of many different kinds”. In other words, the best theorems increase the likelihood of discovering new theorems. Conjectures that help us to reach new mathematical frontiers are better than those that yield fewer insights. But distinguishing between them requires an intuition for how the field itself will evolve. This grasp of the broader context will remain out of AI’s reach for a long time — so the technology will struggle to spot important conjectures.

Despite these caveats, there are many upsides to wider adoption of AI tools in the maths community. AI can provide a decisive edge and open up new avenues for research.

Mainstream mathematics journals should also publish more conjectures. Some of the most significant problems in maths — such as Fermat’s Last Theorem, the Riemann hypothesis, Hilbert’s 23 problems and Ramanujan’s many identities — and countless less-famous conjectures have shaped the course of the field. Conjectures speed up research by pointing us in the right direction. Journal articles about conjectures, backed up by data or heuristic arguments, will accelerate discovery.

Last year, researchers at Google DeepMind predicted 2.2 million new crystal structures. But it remains to be seen how many of these potential new materials are stable, can be synthesized and have practical applications. For now, this is largely a task for human researchers, who have a grasp of the broad context of materials science.

Similarly, the imagination and intuition of mathematicians will be required to make sense of the output of AI tools. Thus, AI will act only as a catalyst of human ingenuity, rather than a substitute for it.