@Samson, about the ± presentation: here is another way to present it, using not just the real number line but distance (absolute value). Here x₀ acts as a pivot point for the maximum and minimum values of x, which makes it (in this setting) an analogue of 0. In the special case, x₀ is 0. I also included one use from secondary-school math. Other uses include limits (just by having an inequality instead of an equality).
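A minimal worked sketch of the idea, assuming d ≥ 0 is the distance from the pivot x₀ (the symbols d, ε, δ, f and L are my own notation, not taken from the images). The equality gives the two endpoints, x₀ = 0 recovers the plain ± case, and replacing = with ≤ or < gives the interval form used in limits:

$$
\begin{aligned}
|x - x_0| = d \;&\iff\; x - x_0 = \pm d \;\iff\; x = x_0 \pm d
  &&\text{so } \min x = x_0 - d,\ \max x = x_0 + d \\
|x| = d \;&\iff\; x = \pm d
  &&\text{special case } x_0 = 0 \\
|x - 3| = 2 \;&\iff\; x = 3 \pm 2 \;\iff\; x \in \{1, 5\}
  &&\text{numeric example} \\
|x - x_0| \le d \;&\iff\; x_0 - d \le x \le x_0 + d
  &&\text{inequality instead of equality} \\
0 < |x - x_0| < \delta \;&\implies\; |f(x) - L| < \varepsilon
  &&\text{limit form: } \lim_{x \to x_0} f(x) = L
\end{aligned}
$$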


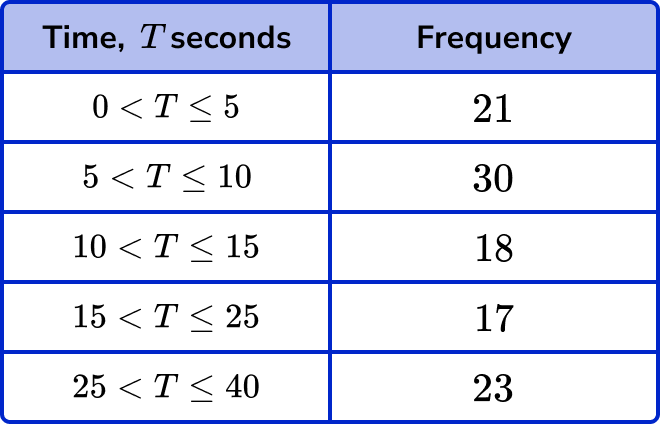

E.g.

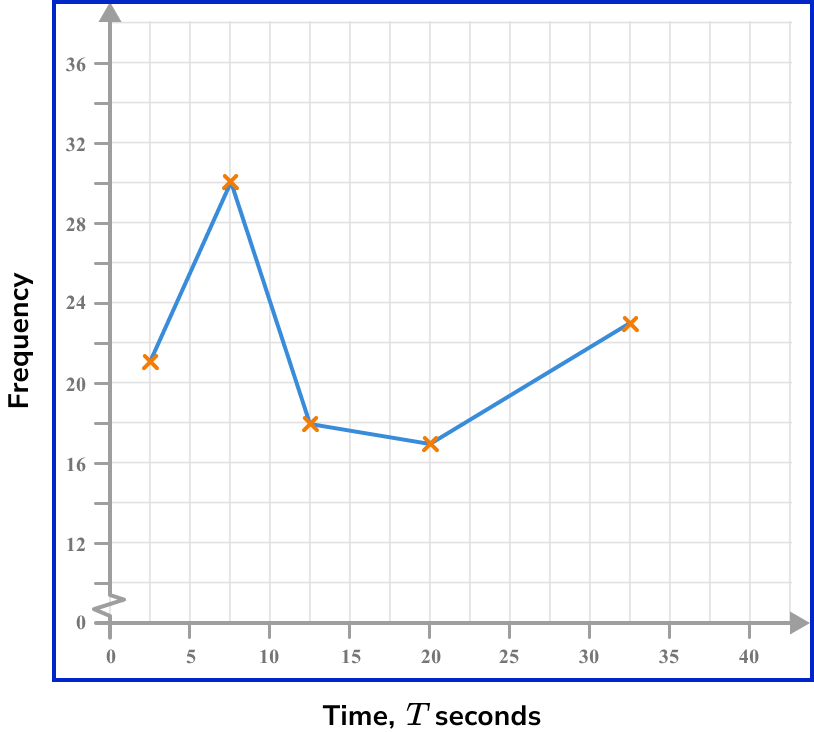

E.g.