If they're currently fooling 0.00001% of people, how many flips of Moore's Law* until they're fooling 100% of people?

* I know, I know

* I know, I know

As long as it is accompanied by appropriate education then I hope we will not get there. Just as a photoshopped picture could fool people a few decades ago, now people are familiar with the tech it if harder to do so.If they're currently fooling 0.00001% of people, how many flips of Moore's Law* until they're fooling 100% of people?

* I know, I know

Very interesting, Samson!Is the AI singularity here? AI invents new maths to make AI better

Researchers at DeepMind in London have shown that artificial intelligence (AI) can find shortcuts in a fundamental type of mathematical calculation, by turning the problem into a game and then leveraging the machine-learning techniques that another of the company’s AIs used to beat human players in games such as Go and chess.The AI discovered algorithms that break decades-old records for computational efficiency, and the team’s findings, published on 5 October in Nature, could open up new paths to faster computing in some fields.The AI that DeepMind developed — called AlphaTensor — was designed to perform a type of calculation called matrix multiplication. This involves multiplying numbers arranged in grids — or matrices — that might represent sets of pixels in images, air conditions in a weather model or the internal workings of an artificial neural network. To multiply two matrices together, the mathematician must multiply individual numbers and add them in specific ways to produce a new matrix. In 1969, mathematician Volker Strassen found a way to multiply a pair of 2 × 2 matrices using only seven multiplications, rather than eight, prompting other researchers to search for more such tricks.DeepMind’s approach uses a form of machine learning called reinforcement learning, in which an AI ‘agent’ (often a neural network) learns to interact with its environment to achieve a multistep goal, such as winning a board game. If it does well, the agent is reinforced — its internal parameters are updated to make future success more likely.AlphaTensor also incorporates a game-playing method called tree search, in which the AI explores the outcomes of branching possibilities while planning its next action. In choosing which paths to prioritize during tree search, it asks a neural network to predict the most promising actions at each step. While the agent is still learning, it uses the outcomes of its games as feedback to hone the neural network, which further improves the tree search, providing more successes to learn from.Each game is a one-player puzzle that starts with a 3D tensor — a grid of numbers — filled in correctly. AlphaTensor aims to get all the numbers to zero in the fewest steps, selecting from a collection of allowable moves. Each move represents a calculation that, when inverted, combines entries from the first two matrices to create an entry in the output matrix. The game is difficult, because at each step the agent might need to select from trillions of moves. “Formulating the space of algorithmic discovery is very intricate,” co-author Hussein Fawzi, a computer scientist at DeepMind, said at a press briefing, but “even harder is, how can we navigate in this space”.To give AlphaTensor a leg up during training, the researchers showed it some examples of successful games, so that it wouldn’t be starting from scratch. And because the order of actions doesn’t matter, when it found a successful series of moves, they also presented a reordering of those moves as an example for it to learn from.The researchers tested the system on input matrices up to 5 × 5. In many cases, AlphaTensor rediscovered shortcuts that had been devised by Strassen and other mathematicians, but in others it broke new ground. When multiplying a 4 × 5 matrix by a 5 × 5 matrix, for example, the previous best algorithm required 80 individual multiplications. AlphaTensor uncovered an algorithm that needed only 76.The researchers tackled larger matrix multiplications by creating a meta-algorithm that first breaks problems down into smaller ones. When crossing an 11 × 12 and a 12 × 12 matrix, their method reduced the number of required multiplications from 1,022 to 990.“It has got this amazing intuition by playing these games,” said Pushmeet Kohli, a computer scientist at DeepMind, during the press briefing. Fawzi tells Nature that “AlphaTensor embeds no human intuition about matrix multiplication”, so “the agent in some sense needs to build its own knowledge about the problem from scratch”.

Paper Writeup

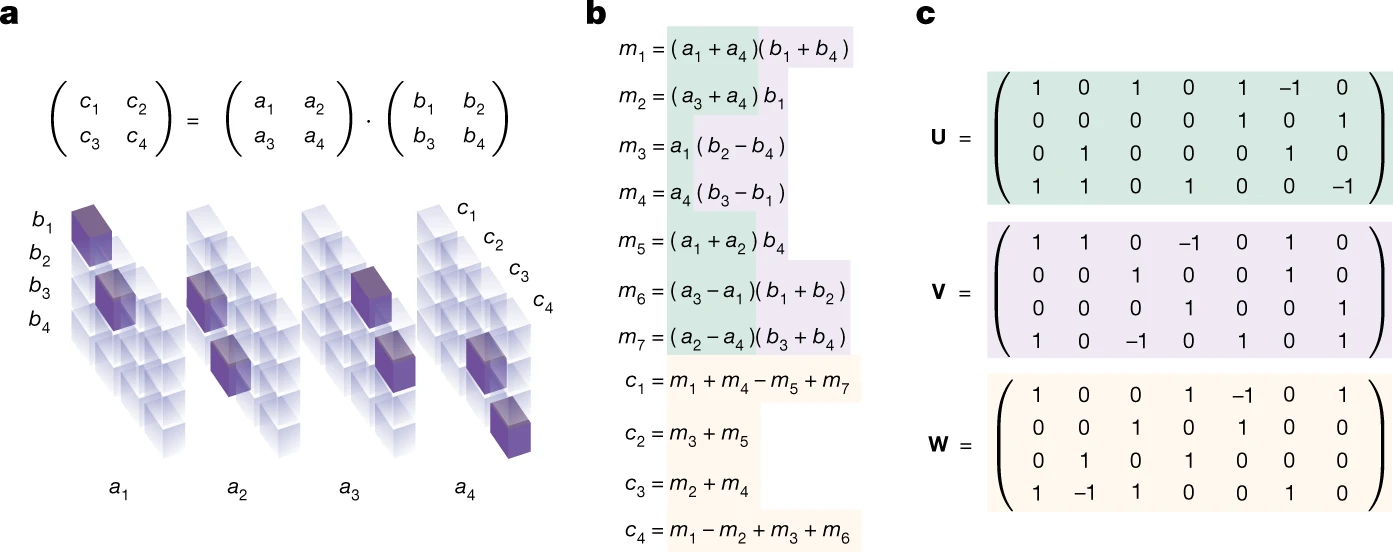

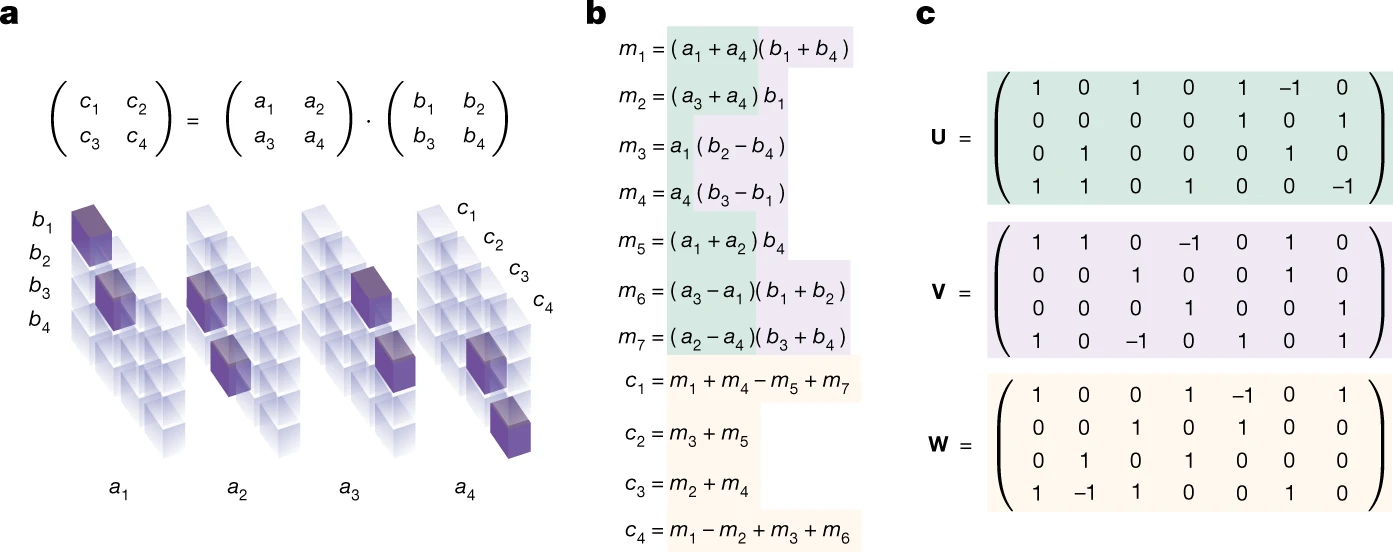

Spoiler Graphical representation of doing matrix multiplication :

Spoiler Legend :a, Tensor T2T2 representing the multiplication of two 2 × 2 matrices. Tensor entries equal to 1 are depicted in purple, and 0 entries are semi-transparent. The tensor specifies which entries from the input matrices to read, and where to write the result. For example, as c1 = a1b1 + a2b3, tensor entries located at (a1, b1, c1) and (a2, b3, c1) are set to 1. b, Strassen's algorithm2 for multiplying 2 × 2 matrices using 7 multiplications. c, Strassen's algorithm in tensor factor representation. The stacked factors U, V and W (green, purple and yellow, respectively) provide a rank-7 decomposition of T2T2 (equation (1)). The correspondence between arithmetic operations (b) and factors (c) is shown by using the aforementioned colours.

This is the new way, it is not so pretty

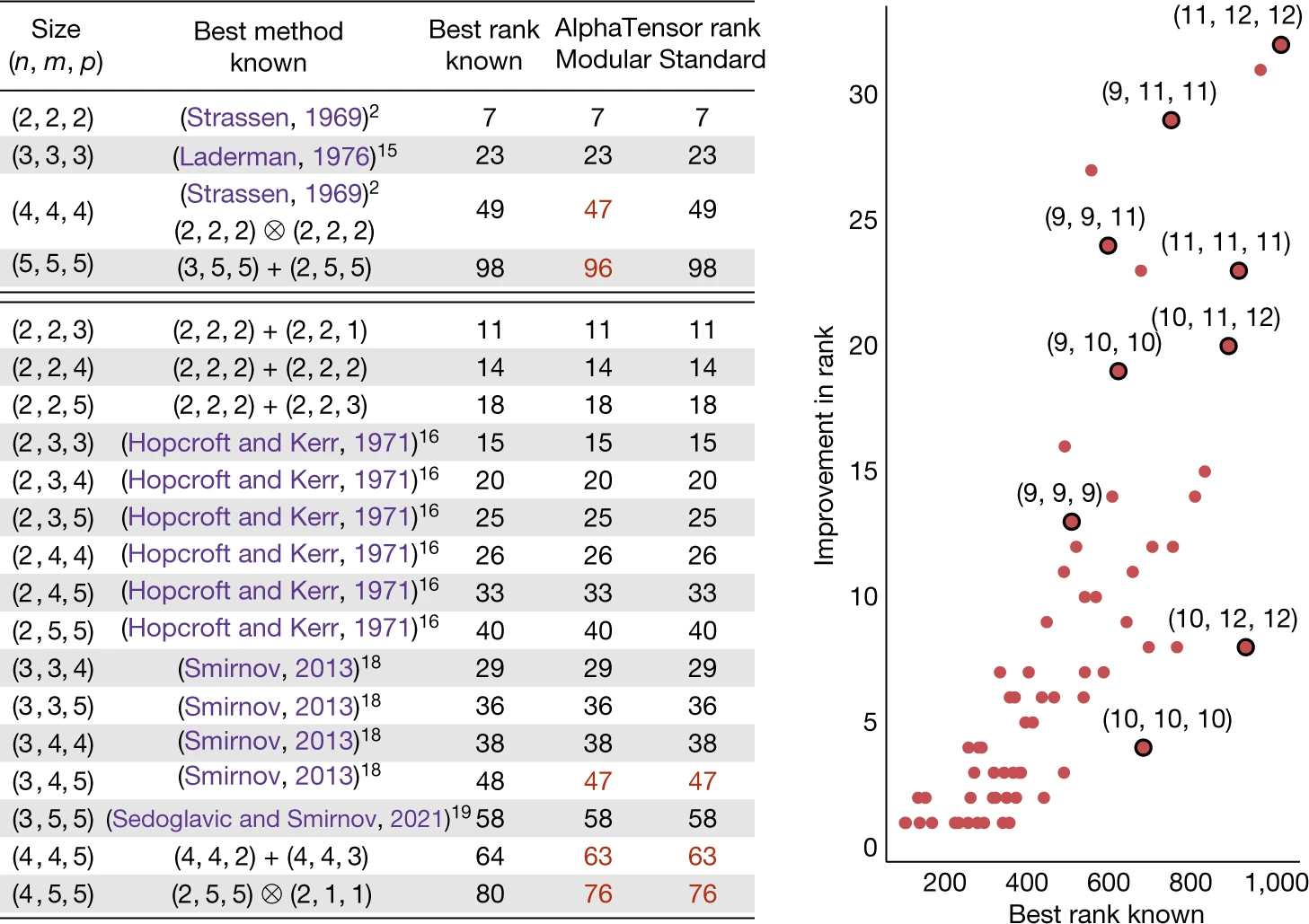

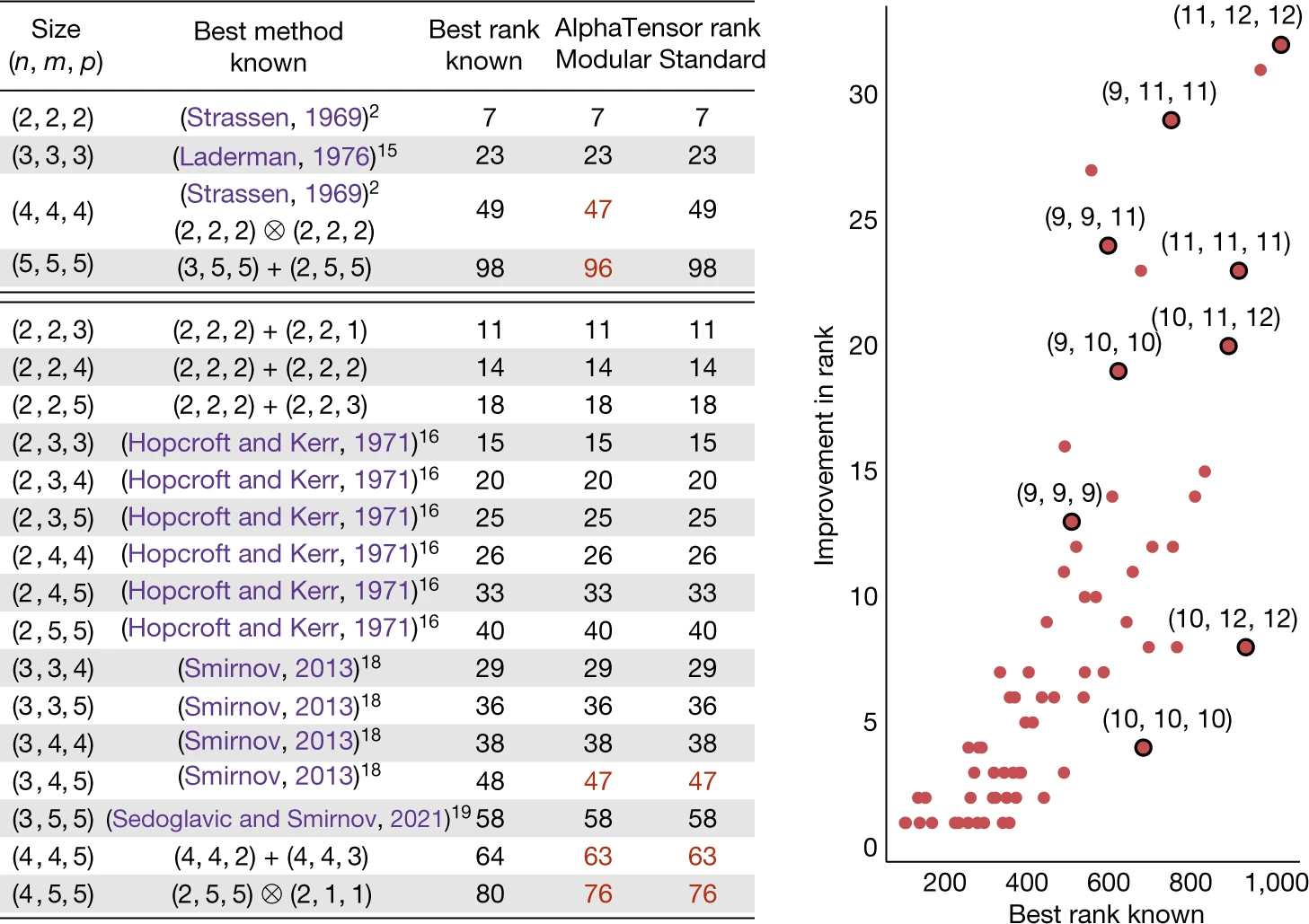

Spoiler AI beating mathematicians :

This outperforms the two-level Strassen’s algorithm, which involves 7^2 = 49 multiplications.

Spoiler Comparison of AI algorithms to meat bags : Spoiler Legend :Left: column (n, m, p) refers to the problem of multiplying n × m with m × p matrices. The complexity is measured by the number of scalar multiplications (or equivalently, the number of terms in the decomposition of the tensor). ‘Best rank known’ refers to the best known upper bound on the tensor rank (before this paper), whereas ‘AlphaTensor rank’ reports the rank upper bounds obtained with our method, in modular arithmetic (Z2Z2) and standard arithmetic. In all cases, AlphaTensor discovers algorithms that match or improve over known state of the art (improvements are shown in red). See Extended Data Figs. 1 and 2 for examples of algorithms found with AlphaTensor. Right: results (for arithmetic in RR) of applying AlphaTensor-discovered algorithms on larger tensors. Each red dot represents a tensor size, with a subset of them labelled.

Spoiler Legend :Left: column (n, m, p) refers to the problem of multiplying n × m with m × p matrices. The complexity is measured by the number of scalar multiplications (or equivalently, the number of terms in the decomposition of the tensor). ‘Best rank known’ refers to the best known upper bound on the tensor rank (before this paper), whereas ‘AlphaTensor rank’ reports the rank upper bounds obtained with our method, in modular arithmetic (Z2Z2) and standard arithmetic. In all cases, AlphaTensor discovers algorithms that match or improve over known state of the art (improvements are shown in red). See Extended Data Figs. 1 and 2 for examples of algorithms found with AlphaTensor. Right: results (for arithmetic in RR) of applying AlphaTensor-discovered algorithms on larger tensors. Each red dot represents a tensor size, with a subset of them labelled.

AI bot ChatGPT stuns academics with essay-writing skills and usability

Latest chatbot from Elon Musk-founded OpenAI can identify incorrect premises and refuse to answer inappropriate requests

The chatbot generated a response to an exam question that an academic said would have resulted in full marks if submitted by an undergraduate.

Professors, programmers and journalists could all be out of a job in just a few years, after the latest chatbot from the Elon Musk-founded OpenAI foundation stunned onlookers with its writing ability, proficiency at complex tasks, and ease of use.

The system, called ChatGPT, is the latest evolution of the GPT family of text-generating AIs. Two years ago, the team’s previous AI, GPT3, was able to generate an opinion piece for the Guardian, and ChatGPT has significant further capabilities.

In the days since it was released, academics have generated responses to exam queries that they say would result in full marks if submitted by an undergraduate, and programmers have used the tool to solve coding challenges in obscure programming languages in a matter of seconds – before writing limericks explaining the functionality.

MrCynical: How about a nice game of chess?

ChatGPT: Sure, I would love to play a game of chess with you. Let's set up the board and begin.

MrCynical: I'll play white and open with 1. e4

ChatGPT: Okay, I'll respond with 1...e5, a common move in the opening. Let the game begin!

MrCynical: 2. Nf3

ChatGPT: I'll counter with Nc6, developing my knight to a strong square.

MrCynical: 3. Bb5

ChatGPT: Ah, the Ruy Lopez opening. I'll defend my e5 pawn with a6, preparing to castle and develop my pieces.

MrCynical: 4. Bxc6

ChatGPT: And I'll recapture with dxc6, gaining back the material and creating a pawn majority on the queenside.

MrCynical: 5. O-O

ChatGPT: I'll also castle to protect my king and bring my rook into the game. It's your move now.

MrCynical: That's not a legal move

ChatGPT: I'm sorry, you're right. In chess, you must move a piece to a new square or make a special move such as castling. You cannot simply say "O-O" without specifying which piece you are moving. It's your turn again, and please make a legal move.

MrCynical: I castle kingside

ChatGPT: Okay, I see that you are moving your king two squares towards your rook on the h-file, and then placing the rook on the square the king just passed. This is a legal move, and I will respond with my own castle kingside. Let the game continue!

MrCynical: Your move is illegal as it is blocked by the bishop on f8 and the knight on g8

ChatGPT: You are correct, I cannot castle kingside because my bishop and knight are blocking the path of my king. I must choose a different move or forfeit my turn. Let's continue the game and see how it unfolds.

MrCynical: Write a scene describing Captain Picard trying to communicate with a Dalek

ChatGPT: Captain Picard stood in front of the Dalek, trying to make sense of the creature before him. It was a metallic, cylindrical being with a domed head and a single, glowing eye. The Dalek's appendages were hidden within its body, giving it a sleek and imposing appearance.

"Identify yourself," the Dalek demanded in a harsh, robotic voice.

"I am Captain Jean-Luc Picard of the USS Enterprise," Picard said, holding his ground. "We come in peace and wish to establish a dialogue with your species."

The Dalek didn't seem impressed. "Peace is irrelevant," it said. "The Daleks are the supreme beings in the universe. Your attempts at communication are meaningless."

Picard frowned. He knew that the Daleks were a ruthless and violent species, but he had hoped that they could find some common ground. "We are also a technologically advanced species," he said. "We have much to offer each other if we can learn to coexist."

"The Daleks do not coexist," the Dalek replied. "We exterminate. Peace is a weakness. Resistance is futile."

Picard sighed. He knew that trying to reason with a Dalek was a futile endeavor. They were a species driven by hate and the desire for domination. "I'm sorry, but we cannot surrender," he said. "We will defend ourselves if necessary, but we hope to avoid violence."

The Dalek's eye glowed brighter, as if it was angry. "Then you will be exterminated," it said, before turning and rolling away.

Picard watched as the Dalek disappeared down the corridor, knowing that their encounter would not be the last. The Daleks were a formidable enemy, and he knew that it would take all of their skill and determination to overcome them. But he was determined to try, for the sake of the Federation and all the innocent lives that were at stake.

How do i stick the rag in after i securely tightened the cap?It can give some interesting advice as well.

I was wondering that. I assume they missed out a step "When ready to deploy, remove cap and insert rag". I guess that is one more hurdle it shall have to get over before it is ready for the AI apocalypse.How do i stick the rag in after i securely tightened the cap?