Do not go without sleep when you want to remember things (like when studying for exams).

Sleep deprivation disrupts memory: here’s why

A crucial brain signal linked to long-term memory falters in rats when they are deprived of sleep — which might help to explain why poor sleep disrupts memory formation. Even a night of normal slumber after a poor night’s sleep isn’t enough to fix the brain signal.

These results, published today in Nature, suggest that there is a “critical window for memory processing”, says Loren Frank, a neuroscientist at the University of California, San Francisco, who was not involved with the study. “Once you’ve lost it, you’ve lost it.”

In time, these findings could lead to targeted treatments to improve memory, says study co-author Kamran Diba, a computational neuroscientist at the University of Michigan Medical School in Ann Arbor.

Firing in lockstep

Neurons in the brain seldom act alone; they are highly interconnected and often fire together in a rhythmic or repetitive pattern. One such pattern is the sharp-wave ripple, in which a large group of neurons fire with extreme synchrony, then a second large group of neurons does the same and so on, one after the other at a particular tempo. These ripples occur in a brain area called the hippocampus, which is key to memory formation. The patterns are thought to facilitate communication with the neocortex, where long-term memories are later stored.

One clue to their function is that some of these ripples are accelerated re-runs of brain-activity patterns that occurred during past events. For example, when an animal visits a particular spot in its cage, a specific group of neurons in the hippocampus fires in unison, creating a neural representation of that location. Later, these same neurons might participate in sharp-wave ripples — as if they were rapidly replaying snippets of that experience.

Previous research found that, when these ripples were disturbed, mice struggled on a memory test. And when the ripples were prolonged, their performance on the same test improved, leading György Buzsáki, a systems neuroscientist at NYU Langone Health in New York City, who has been researching these bursts since the 1980s, to call the ripples a ‘cognitive biomarker’ for memory and learning.

Researchers also noticed that sharp-wave ripples tend to occur during deep sleep as well as during waking hours, and that those bursts during slumber seem to be particularly important for transforming short-term knowledge into long-term memories. These links between the ripples, sleep and memory are well documented, but few studies have directly manipulated sleep to determine how it affects these ripples, and in turn memory, Diba says.

Wake-up call

To understand how poor sleep affects memory, Diba and his colleagues recorded hippocampal activity in seven rats as they explored mazes over the course of several weeks. The researchers regularly disrupted the sleep of some of the animals and let others sleep at will.

To Diba’s surprise, rats that were woken up repeatedly had similar, or even higher, levels of sharp-wave-ripple activity than the rodents that got normal sleep did. But the firing of the ripples was weaker and less organized, showing a marked decrease in repetition of previous firing patterns. After the sleep-deprived animals recovered over the course of two days, re-creation of previous neural patterns rebounded, but never reached the levels found in animals that had slept normally.

This study makes clear that “memories continue to be processed after they’re experienced, and that post-experience processing is really important”, Frank says. He adds that it could explain why cramming before an exam or pulling an all-nighter might be an ineffective strategy.

It also teaches researchers an important lesson: the content of sharp-wave ripples is more important than their quantity, given that rats that got normal sleep and rats that were sleep-deprived had a similar number of ripples, he says.

Ripple effects

Buzsáki says that these findings square with data his group published in March that found that sharp-wave ripples that occur while an animal is awake might help to select which experiences enter long-term memory.

It’s possible, he says, that the disorganized sharp-wave ripples of sleep-deprived rats don’t allow them to effectively flag experiences for long-term memory. As a result, the animals might be unable to replay the neural firing of those experiences at a later time.

This means that sleep disruption could be used to prevent memories from entering long-term storage, which could be useful for people who have recently experienced something traumatic, such as those with post-traumatic stress disorder, Buzsáki says.

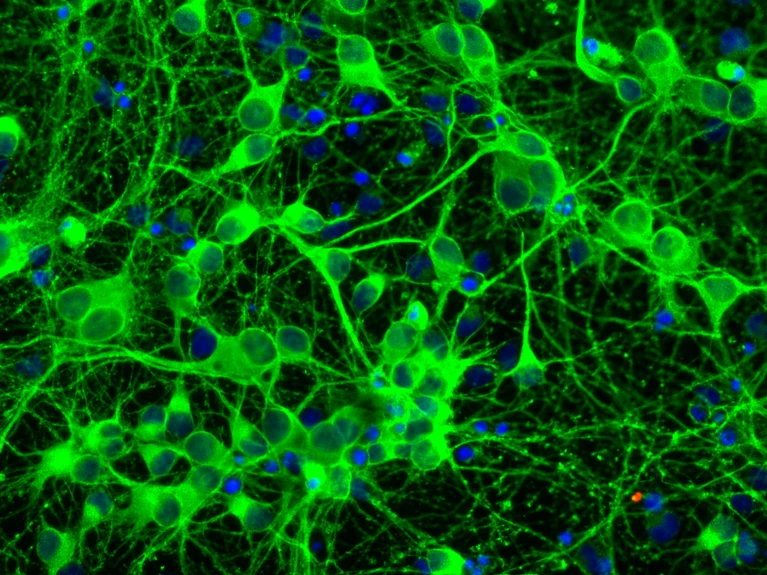

Neurons in the hippocampus, because fluorescence microscopy is cool

www.newscientist.com
